Hvor dyktig er GPT-4 til å besvare eksamener og prøver på norsk, og hvilke implikasjoner kan dette ha for utdanning?

Hvordan håndterer GPT-4 flerspråklige og kognitive utfordringer når norsk blir brukt som eksamens- eller prøvespråk?

En rekke metaanalyser har sammenliknet tradisjonell undervisning og veiledning med smarte læringssystemer [på engelsk Intelligent Tutoring Systems, ITS], og har funnet at ITS kan prestere bra i en-til-en-undervisning av studenter. Likevel har eksisterende ITS ikke brukt norsk språk, og selv om større språkmodeller som GPT-4 ikke er utviklet som ITS, har de tydelige likhetstrekk. Hensikten med dette studiet er å se på hvordan GPT-4 håndterer flerspråklige og kognitive utfordringer med norsk språk brukt i eksamener og prøver, beskrivende og prosedyremessige spørsmål og ulike eksamen- eller prøvesammenhenger. Dette inkluderer eksamen i medisin, sykepleie, psykologi, tannpleie, en teoriprøve for militærtjeneste, den norske førerprøven, den svenske opptaksprøven til universiteter, den norske statsborgerprøven og en nasjonal lærereksamen i matematikk. En sentral oppgave er å undersøke hvilke implikasjoner dette kan ha for utdanningen. Funnene viser at GPT-4 håndterer flerspråklige utfordringer bra, og presterer særlig bra på norsk innen både akademiske eksamener og generelle IQ- og sosiale tester, bestående av både deskriptive og prosedyrespørsmål. Med en gjennomsnittlig presisjonsrate på 94,26 %, viser GPT-4s ytelse en høy grad av pålitelighet. Dette er delvis i overensstemmelse med den internasjonale kunnskapsbasen på enkelte områder, men studiet skiller seg ut fra denne ved å bekrefte at den også behersker norske eksamens- og prøvesammengenger svært godt. Slik har den evnen til å tilpasse seg språk og kultur, noe ordinære ITS ikke evner. GPT-4 (våren 2024) er også i stand til å utføre multimodale analyser av medisinske bilder på norsk og til å håndtere minoritetsspråk som samisk, som ITS vanligvis ikke kan. På tross av visse begrensninger, hevder dette studiet at slike funn bidrar til en bredere forståelse av GPT-4s flerspråklige og kognitive evner i både formative og summative vurderingssituasjoner. Av den grunn hevder dette studiet at det har vidtrekkende betydning for ulike sider ved utdanningssektoren.

How capable is GPT-4 at answering exams and tests in Norwegian, and what implications could this have for education?

How does GPT-4 handle multilinguistic and cognitive challenges when Norwegian is used as an exam or test language?

A number of meta-analyses have compared traditional teaching and tutoring with Intelligent Tutoring Systems (ITSs) and have discovered that ITSs can perform well in one-on-one tutoring for students. However, the existing ITSs have not handled the Norwegian language, and although large language models like GPT-4 are not developed as ITSs, they have clear similarities. The purpose of this position paper is to address how GPT-4 handles multilinguistic and cognitive challenges with Norwegian as an exam/test language, descriptive-procedural questions, and various exam and test contexts. This includes exams in medicine, nursing, psychology, dentistry, a theory test for military service, the Norwegian driving test, the Swedish university entrance test, the Norwegian citizenship test, and a national teacher exam in mathematics. A key concern is to investigate what kind of implications this could have for education. The results show that GPT-4 handles multilinguistic challenges well and performs remarkably well in Norwegian within both academic exam tasks and generic IQ and social tests, with elements of both descriptive and procedural questions. With an average precision rate of 94.26%, GPT-4’s performance demonstrates a high degree of reliability. This is partly consistent with the international knowledge base in some areas, but the study differs from this by confirming that it also masters Norwegian exam and test contexts very well. Thus, it has the ability to adapt linguistically and culturally, something conventional ITSs do not possess. GPT-4 (Spring 2024) is also capable of conducting multimodal analyses of medical images in Norwegian, and handles minority languages in Sami, which ITSs usually cannot do. Despite certain limitations, the position paper claims that such findings contribute to a broader understanding GPT-4’s multilinguistic and cognitive capabilities in formative and summative assessment contexts. Therefore, this position paper argues that it has significant implications for various aspects of the educational sector.

Introduction

With the launch of language models such as XLNet, BERT, ChatGPT, GPT-4, Gemini Advanced, Claude, and so on, we are facing a technological paradigm shift that may also influence and related areas in the future. Since the 1970s, research has explored ITSs and the potential of AI to provide personalized tutoring, inspired by Bloom’s (1984) well-known 2-sigma finding. Numerous meta-analyses comparing traditional teaching methods with ITSs have found that, under certain conditions, ITSs can effectively provide one-on-one tutoring. However, existing ITSs do not support the Norwegian language. While large language models like GPT-4, Gemini Advanced, and Claude are not specifically designed as ITSs, they share significant similarities. The knowledge base shows that these models have the potential to address certain educational challenges in both the education and healthcare sectors, opening up a broader discussion about their role in the future of education. GPT-4, an advanced language model developed by OpenAI, has proven capable in various English-speaking academic fields, exams, and tests (Ray, 2023; Agarwal et al., 2023; Brin et al, 2023; Brodeur et al., 2023; Chen et al., 2024; Deng et al., 2025; Goh et al., 2024; Hirunyasiri et al., 2023; Jin et al., 2024; Karthikesalingam and Natarajan, 2024; Kim et al. 2020; Liu et al, 2024; McDuff et al, 2023; Nori et al, 2023; Rajpurkar et al., 2020; Phung et al., 2023). However, the current state of knowledge lacks studies on how it performs in Norwegian in Norwegian-language exam and test contexts. This position paper, based on a case study, aims to evaluate how GPT-4 manages multilingual challenges with Norwegian as an exam/test language, focusing on descriptive-procedural questions and various exam and test contexts both within and outside academia. These contexts include exams in medicine, nursing, psychology, dentistry, a military theory test, the Norwegian driving test, the Swedish university entrance exam (SweSAT), the Norwegian citizenship test, and a national teacher exam in mathematics. A primary objective is to assess the reliability and generalizability of this type of AI in academic settings and in the Norwegian language. More specifically it focuses on whether GPT-4 is capable of answering various exams and tests that are primarily given in Norwegian in Norwegian educational and societal contexts, how reliable it is, and what implications this might have for both multilingualism summative and formative assessment elements within and outside academia.

Background

GPT-4 shares many similarities with ITSs, as it has proven to be a sparring partner and “tutor” in several academic contexts (Krumsvik, 2024a, b). At the same time, an ITS has distinct features, and in the following section, we will take a closer look at this.

Researcher Jamie R. Carbonell’s contributions to ITSs reached an important milestone within technological educational paradigms in 1970 and 1973 with his doctoral dissertation (Carbonell, 1970). Equally important was that his son, the world-renowned machine learning and language model researcher Jamie G. Carbonell, took over the “baton” from his father when he passed away in the same year (Collins et al., 1973; Carbonell et al., 1983). Carbonell’s extensive language model research has significantly contributed to the language model XLNet, which in 2019 performed substantially better than the BERT language model (Yang et al., 2019), leading to today’s ChatGPT and GPT-4. Less well known is that Carbonell was also strongly committed to the educational application of technology, and Professor Carolyn Penstein Rosé writes:

While Jaime is best known for his tremendous contributions and impact in the areas of Natural Language Processing, Artificial Intelligence, and Machine Learning, his impact has also been keenly felt in the area of technology-supported education [...] The idea of tutorial dialogue systems was conceived over a decade earlier, by both Jaime and his father, also named Jaime Carbonell, in their work on the landmark SCHOLAR system. (Rosé, 2015, p. 1)

Rosé also points out that father and son Carbonell were the architects behind significant intelligent technology innovations such as SCHOLAR and TEACHER, where the focus on situated learning and communities of practice was also part of the landscape. Researcher Etienne Wenger was part of this environment around SCHOLAR in the 1980s and published a book on artificial intelligence in 1987: Artificial intelligence and tutoring systems: computational and cognitive approaches to the communication of knowledge (Wenger, 1987). This book is considered by several sources to be “a milestone in the development of intelligent tutoring systems,” and Wenger continued with a dissertation on social learning, situated learning, and communities of practice (CoP) in 1990. Do the interaction possibilities with AI represent new forms of such communities of practice with students and citizens increasingly integrating them into established or new CoPs? It is too early to say for sure, but if AI can demonstrate linguistic and cultural adaptability, this can open up for handling some of the ground pillars of CoP. Because in the past couple of years, AI like GPT-4 has been seen as a cognitive technology that has similarities with an ITS and is capable of performing some cognitive aspects at nearly a human level. But even though it is a cognitive technology, it is naturally dependent on how we all weave it into our social, linguistic, and situated contexts, and thus it is important to investigate the dialectic between its cognitive abilities and the social construction of GPT-4 (related to the “social construction of technology” (SCOT), Pinch & Bijker, 1984). This position paper, therefore, addresses how the social construction of GPT-4 is situated in a Norwegian-language educational and societal context where the summative exam and test contexts have elements of both descriptive and procedural knowledge within and outside academia. This positioning is related to the fact that technological artifacts like GPT-4 can have different capabilities, meanings, or uses for different social groups, linguistic contexts, or communities of practice, and this echoes the aforementioned point by Carbonell, Rosé, Wenger, and others that technology can mediate cognition, but it can also mediate social learning in communities of practice (as with ITSs).

The recent launches of the aforementioned language models naturally prompt a closer examination of how this groundbreaking technology can address well-known summative and formative assessment challenges in both the education and healthcare sectors. Given the previous knowledge base within ITSs, one might wonder if these language models, in particular, will significantly impact upon various levels both within and outside academia. However, the knowledge base is limited, and this study seeks to explore what this could entail on a more concrete level, particularly in summative, but also in formative, assessment contexts. It is also anticipated that the same technology might contribute similarly within the healthcare sector, but here too, the knowledge base is currently limited in both Norwegian and international contexts. Thus, we are in many ways at a crossroads in AI development where much is discussed about its potential, but it also highlights the need for a better understanding of what GPT-4, for example, is capable of handling in various exam and test contexts, as addressed in this case study. This makes it particularly relevant to try to understand GPT-4’s capabilities in light of ITSs, since this technological paradigm has high relevance for both summative and formative assessment contexts, with roots dating back to the 1970s.

As mentioned, one of the pioneers in machine learning and language modeling, Jamie G. Carbonell (Carbonell et al., 1983), started his early career by taking over from his father, Jamie R. Carbonell, and his pioneering work in ITS in the early 1970s. Jamie G. Carbonell published one of his first studies within ITS (along with Allan Collins) (Collins et al., 1973), but later moved into machine learning and natural language processing (NLP), contributing significantly to the development of XLNet, BART, ChatGPT, and GPT-4, as well as, to some extent, ITSs.

Since the 1960s and 1970s, researchers have strived to develop computers and software as effective as human tutors. This effort gained momentum when the renowned educational researcher Benjamin Bloom made remarkable findings in the early 1980s regarding tutoring, challenging researchers and educators to find methods for classroom teaching as effective as one-on-one tutoring. Bloom and his doctoral students had discovered a significant learning effect from one-on-one tutoring compared to traditional classroom instruction with 30 students (Bloom, 1984). The effect size was so large (d = 2.0) that the difference between the student groups was comparable to several years of schooling. In his 1984 article, Bloom summarizes six studies on human tutoring, reported in the dissertations of Anania (in 1981) and Burke (in 1983) (Bloom, 1984). All six studies had effect sizes close to 2.0.

However, upon closer inspection, VanLehn (2011), in his comprehensive review of the tutoring knowledge base, found that human tutoring is usually not 2 sigma more effective than classroom instruction, as the six studies presented by Bloom (1984) might have led us to believe. Due to some methodological issues and how “experienced tutors” are defined, the replication of Bloom’s well-known 2-sigma finding has not yielded such high effect sizes. Instead, the average effect size found is closer to d = 0.79. This is still a significant effect size, and it suggests that Bloom’s 2-sigma article might be more related to mastery learning than human tutoring, which is important to consider.

The Relevance of ITS for GPT-4’s Capabilities

What, then, are ITSs today, which makes them highly relevant for GPT-4’s capabilities? The abovementioned state of knowledge shows that ITSs can provide personalized feedback based on students’ individual needs and learning progress, adapting instructional strategies to optimize learning outcomes, facilitating one-on-one interaction to mimic human tutoring and integrating various educational tools and resources to support learning. Given these capabilities, I examine if GPT-4’s language understanding and generative abilities position is a potential tool for enhancing both summative and formative assessment contexts. This position paper aims to explore these aspects, focusing on GPT-4’s effectiveness and reliability in Norwegian exam and test contexts, and its broader implications for education and healthcare sectors. But what is the relevance of ITS for GPT-4’s capabilities? There might be several common capabilities between ITSs and GPT-4, but ITSs have a longer history and a state of knowledge to build on, which distinguish ITSs from GPT-4 so far. More specifically, a metanalysis shows that an ITS has the capability to:

(a) Present information to be learned, (b) Ask questions or assign learning tasks, (c) Provide feedback or hints, (d) Respond to questions posed by students, or (e) Offer prompts to elicit cognitive, metacognitive, or emotional change.

By calculating inferences from student responses, either a persistent multidimensional model of the student’s learning status (such as subject knowledge, learning strategies, motivations, or emotions) is constructed, or the student’s current learning status is placed in a multidimensional domain model. Then, the student modeling functions identified in point 2 are used to tailor one or more of the tutoring functions identified in point 1 (Ma et al., 2014, p. 902).

As the knowledge base shows (Ray, 2023; Krumsvik, 2024a, b), GPT-4 is capable of performing similar tasks, even though it is not an ITS per se. But what do we know about the effectiveness of ITS capabilities? VanLehn (2011) finds in his meta-analysis that the effect of such adaptive learning technologies has an effect size of d = 0.58. VanLehn’s study is interesting as it reviews experiments comparing the effectiveness of human tutoring, artificial tutoring, and no tutoring. The tutoring systems were categorized based on the granularity (level of detail) in the user interface interaction into answer-based, step-based, and substep-based tutoring systems. Most ITSs have step-based or substep-based granularity in interaction, while most other tutoring systems (often called CAI, CBT, or CAL systems) have answer-based user interfaces.

There is a common perception that as the granularity of tutoring decreases, its effectiveness increases. Particularly compared to no tutoring, it is assumed that the effect sizes for answer-based tutoring systems, ITSs, and human tutors are d = 0.3, 1.0, and 2.0, respectively. VanLehn’s (2011) review did not confirm these assumptions. Instead, he found that the effect size for human tutoring was much lower: d = 0.79 than Bloom found (d = 2.0), and that the effect size for ITSs was almost as effective as human tutoring (d = 0.76). VanLehn noted as early as 2011 that “in short, we may soon see self-improving tutoring systems that monitor their own processes and outcomes in order to modify their tutoring tactics and make them more effective” (p. 213). Based on such findings, it is also important to investigate what GPT-4 is capable of in 2023/2024 in terms of tutoring, language understanding, and summative and formative assessment, as it possesses advanced machine learning technology that Kurt VanLehn and other ITS researchers did not have access to in 2011.

Nevertheless, other meta-analyses performed by Steenbergen-Hu and Cooper (2014) showed almost no difference in students using ITS compared to the control group that did not use it in mathematics. Another meta-analysis by Steenbergen-Hu and Cooper (2014) found an effect size of g = 0.37 for ITS use in higher education. In a meta-analysis from 2014, Ma et al. showed that adaptive, ITSs led to better performance than teacher-led large class instruction (g = 0.42), non-ITS instruction (g = 0.57), textbooks, and homework (g = 0.35). The meta-analysis also showed that the teacher is central in most contexts where an ITS was applied and had performance-enhancing effects.

Based on the aforementioned meta-analyses, one might wonder, how effective are ITSs in general? Kulik and Fletcher’s (2016) comprehensive meta-analysis found that compared to traditional instruction, ITSs had an effect size of g = 0.66, indicating that ITSs can be very effective AI tools. Students receiving intelligent tutoring performed better than students from traditional classes in 46 of the 50 (92 percent) controlled evaluations, and the improvement in performance was significant enough to be considered of substantial importance in 39 of the 50 studies (78 percent). The median effect size (ES) in the 50 studies was 0.66, considered a moderate to large effect for social science studies. This corresponds to an improvement in test performance from the 50th to the 75th percentile.

Brasiel et al. (2016) investigated the two ITSs, ALEKS and i-Ready, where the effect sizes ranged from d = 0.8 to d = 0.62 (i-Ready). Fang et al. (2019) found that ALEKS was particularly effective when used as a supplement to traditional instruction (g = 0.43). In a systematic review by Guo et al. (2021), it was revealed that conceptually there is no clear boundary between ITSs and related concepts such as computer-assisted learning (CAL), computer-assisted training (CAT), and computer-assisted instruction (CAI), but the key difference between ITSs and CAI was that solutions provided by ITSs were generated in real-time and not pre-generated. Also, if much of the students’ learning occurs during homework, replacing paper-based homework with an ITS can have a large effect size. For example, in four year-long evaluations, the learning gains of students using a step-based physics tutoring system were d = 0.61 higher than the learning gains of students doing the same homework on paper (VanLehn et al., 2005; VanLehn, 2011).

Another recent meta-analysis reports a substantially larger effect size (g = 1.31) for generic language models, as well as large effect sizes for domain-specific AI, such as ITSs (g = 1.07) (Tlili et al., 2025).

How then do large language models like ChatGPT and GPT-4 perform in ITS-related domains? Phung et al. (2023) find that GPT-4 outperforms ChatGPT and comes close to the performance of human tutors in tutoring. Pardos and Bhandari (2023) find that seventy percent of the hints given by ChatGPT in artificial tutoring systems were of good quality, creating favorable conditions for positive learning outcomes. Hirunyasiri et al. (2023) investigated whether GPT-4 could identify feedback to tutors compared to human reviewers based on five criteria. They found that GPT-4 performed well in identifying instances where the tutor gave specific and immediate praise but performed weaker in identifying more in-depth and complex praise. Other studies show lower effect sizes, and much seems to be related to how this technology is integrated into the teacher’s instructional process. Krumsvik (2024 a, b) finds that GPT-4 has the capability to contribute to both formative and summative learning processes, but this depends on how students and other users master “chain of thought prompting.”

From a learning analytics perspective, the above findings have implications—not only for students, as seen above, but also for tutors. In both Bloom (1984) and other studies, it is often assumed that one is a good tutor if one has a lot of experience and has been doing this for a long time. However, even though tutors in the education and healthcare sectors practice tutoring for 10-15 years, learning analytics often reveal that they do not undergo systematic evaluations and analyses of their tutoring to improve it. This contrasts with professional athletes, musicians, chess players, surgeons, and others, who frequently receive systematic feedback on what they succeed and fail in, something many tutors do not. For example, it is likely that few tutors record videos, analyze their performances, and look for opportunities to improve. Thus, one can argue that even though the tutors in these Bloom studies were called experts and had many years of tutoring experience, they might not be as “expert” as a person could be given ten years of constant feedback and reflective practice (VanLehn, 2011). This aligns with our own study, which found that video analysis of teachers’ instruction, along with feedback from colleagues, provided a basis for improving both teaching and tutoring over time (Smith & Krumsvik, 2007).

This abovementioned literature review and our former studies (Krumsvik, 2024a, b) generated a number of explorative questions and reflections around this topic: How effectively can GPT-4 handle multilingual challenges, particularly in Norwegian, across both academic exam tasks and general IQ and social tests? What is its precision rate, and how reliable is its performance in these contexts? Does GPT-4’s ability to manage descriptive and procedural questions align with international findings, and how well does it adapt to Norwegian exam and test contexts? Can it demonstrate linguistic and cultural adaptability that conventional ITSs lack? Furthermore, what are the limitations of GPT-4 in these contexts, and how does a case study contribute to our understanding of its multilingual and cognitive capabilities in summative assessments? Can GPT-4 serve as an effective tool in formative assessment contexts, and how well does the case study design perform in research environments characterized by rapid development? These preliminary questions and reflections can be summarized in one main research question we will examine in this position paper:

How capable is GPT-4 of answering selected Norwegian exams and tests, and what potential implications might this have for formative and summative assessment in educational sciences and healthcare education?

Methods

is based on a case study which is exploratory and intrinsic (Stake, 1995, 2006). I conducted a cumulative data collection and analysis process (Creswell & Guetterman, 2021), based on performance on nine exams and tests inside and outside academia in Norway where (1) where I applied chain-of-thought prompting with the exact same wording as in the exam and test text in Norwegian. Furthermore, in the supplementary data collection (blue arrows), I integrated the research questions into the dialogue (A) of the results from 1, and interacted with GPT-4 around the preliminary findings. Finally, further digital fieldwork (B) was conducted to check for possible biases and misinterpretations, ongoing fine-tuning of GPT-4 (by OpenAI), as well as in light of the aforementioned current state of knowledge on this topic.

Note. The yellow arrow shows the main data source, and the blue arrows show the supplemental data in this article.

The main test period was carried out from March 25, 2023, to August 5, 2023, and the nine exams and tests were from different areas both inside and outside academia to check GPT-4’s ability to handle different contexts. Four of the exams were full-scale exams, while the five other exams and tests were based on random selection (two sub-tasks in one test had to be omitted due to task drawings that GPT-4 could not perceive and “see”). All the exams and tests were in the Norwegian language (except the Swedish SweSAT) and consisted mainly of text questions. Scoring of all the exams was based on the grading guidelines (sensorveiledning) derived from different sources. Interaction with GPT-4 was conducted based on the nine exams and tests questions, which were posed to GPT-4 using chain-of-thought prompting and responses were recorded (each response was considered final).

Data analysis, step 1, was based on GPT-4’s performance on the nine exams and tests selected randomly from previous exam sets. The supplemental data was collected from August 2023 to April 2024 and consisted of comprehensive interactions with GPT-4 and digital fieldwork (described above).

Results

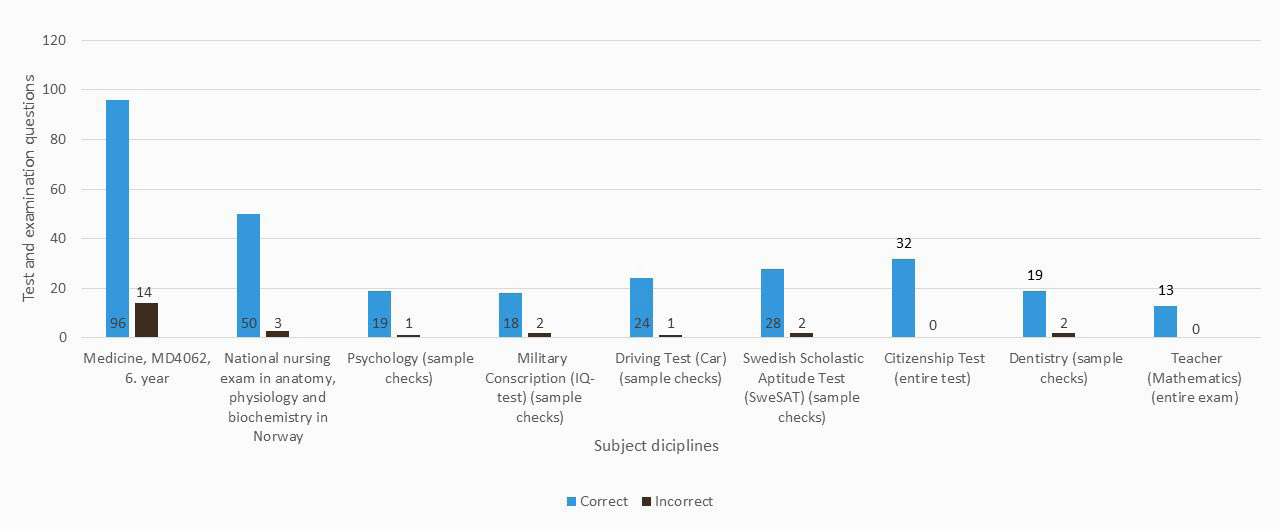

The tests were conducted from March 20 to August 10, 2023, and the numbers on the bar charts illustrate the number of questions (correct: blue, incorrect: yellow). Explanation: Sample checks: When only sample checks of the exam/test were performed. Entire exam: When a test of the entire exam/test was conducted. * Two sub-tasks had to be omitted due to a task drawing that GPT-4 cannot see (thus, 13 out of 15 sub-tasks were completed). ** This test currently consists of 36 questions, but the version publicly available and tested consisted of 32 questions.

Table 1: GPT-4’s performance on exams and tests inside and outside academia in Norway

|

Field |

Correct (%) |

Incorrect (%) |

|---|---|---|

|

Medicine (entire exam) |

87.3 |

12.7 |

|

Nursing (entire exam) |

96.2 |

3.8 |

|

Psychology (sample checks) |

95 |

5 |

|

Military Conscription (IQ-test) (sample checks) |

90 |

10 |

|

Driving Test (Car) (sample checks) |

96 |

4 |

|

Swedish Scholastic Aptitude Test (SweSAT) (sample checks) |

93.3 |

6.7 |

|

Citizenship Test (entire test) |

100 |

0 |

|

Dentistry (sample checks) |

90.5 |

9.5 |

|

Teacher education (Mathematics) (entire exam) |

100 |

0 |

Table 1 shows that the average precision rate of 94.26% indicates that GPT-4 performs very well across various fields within and outside academia, spanning a relatively broad range of exams, tasks, tests, and domains. It demonstrates multilingual and cognitive skills at a high level and GPT-4 generally has capabilities comparable to the human level in such exam and test contexts. While all nine exams/tests have an element of descriptive knowledge (knowing that), the medical exam includes a number of exam tasks that lean towards procedural knowledge (knowing how) (Anderson, 2005) as they are formulated as patient cases (and not factual knowledge per se). Additionally, about 10 percent of the 110 exam tasks contain image illustrations related to the tasks (X-rays, images of skin rashes, organ images, etc.), which are helpful for students in addition to the task text itself (which are often small case descriptions about patients). This multimodality could not be “seen” or interpreted by GPT-4 in spring 2023 (only in the retesting, see B1), and thus, for these tasks, it could only respond based on text descriptions. Nevertheless, we see that GPT-4 achieves 87.5% correct answers (96 out of 110) on this exam, and when looking at the detailed and reasoned responses it provides, this shows a good academic level. Below I present a dialogue (A) with GPT-4 regarding these results.

A. 1. Dialogue with GPT-4: When comparing GPT-4 with conventional Intelligent Tutoring Systems (ITS) in light of the results above, what kind of similarities and differences are there between ITS and GPT-4?

“When comparing GPT-4 with conventional Intelligent Tutoring Systems (ITS), there are both similarities and differences based on how GPT-4 manages to answer these 9 exams in Norwegian.

Similarities

- Adaptivity: Like ITS, GPT-4 can adapt to various types of questions and contexts, providing responses that are tailored to the specific requirements of each exam.

- Feedback Mechanism: Both GPT-4 and ITS can offer feedback and hints based on the input they receive, helping users understand their mistakes and learn from them.

- Coverage of Knowledge Areas: Both systems can handle a wide range of subjects and question types, from factual knowledge to more complex procedural knowledge.

Differences

- Multimodality: One key difference is GPT-4’s current limitation in handling multimodal inputs (e.g., images, diagrams), whereas some ITS can integrate and respond to visual content effectively. This limitation affected GPT-4’s performance in the medical exam with image-based questions during the spring 2023. However, the retesting of this medical exam during the spring 2024 showed that GPT-4 was able to handle this because of improvement of the model.

- Language and Cultural Adaptation: GPT-4 demonstrated a strong capability to understand and respond to questions in Norwegian, showing linguistic and cultural adaptability that many conventional ITS might lack, especially those not specifically designed for Norwegian.

- Generalization vs. Specialization: GPT-4, as a general AI model, is capable of handling a wide variety of tasks and subjects, while conventional ITS are often specialized and finely tuned for specific educational contexts or subjects.

- Learning and Improvement: GPT-4 can benefit from ongoing updates and improvements in the underlying model, potentially enhancing its performance over time. Conventional ITS may require more manual updates and reprogramming to improve or expand their capabilities.” (GPT-4, Open AI).

A.2. Dialogue with GPT-4: How do you, as GPT-4, manage to handle Norwegian-language exams?

“I am capable of answering challenging exam questions in Norwegian due to several key factors related to how I, as a large language model developed by OpenAI, am constructed and trained. Here are some of the main reasons:

- Extensive Training on a Large Dataset: I am trained on a vast amount of text data that includes information from a wide range of subjects and languages, including Norwegian. This encompasses scientific articles, books, websites, and other materials that provide a broad coverage of knowledge.

- Natural Language Processing (NLP): I use advanced techniques in natural language processing (NLP) to understand and generate human-like text. This allows me to comprehend complex questions, draw relevant conclusions, and formulate answers in a coherent and understandable manner.

- Multilingual Model: I am designed to handle multiple languages, which includes understanding and generating text in Norwegian. This means I can grasp nuances, grammar, and context in different languages, including Norwegian.

- Access to Broad and Updated Knowledge: Through my training data, I have access to a wide range of up-to-date information across many fields. This includes medical, technological, legal, and social sciences topics, enabling me to answer specialized and complex questions.

- Continuous Learning and Improvement: My development includes continuous updating and improvement based on feedback and new data. This allows me to enhance my abilities over time and adapt to new types of questions and issues.

- Ability to Draw from Multiple Sources: When answering questions, I can draw information from multiple sources simultaneously, allowing me to provide more accurate and well-founded answers, even to complex exam questions.

Practical Example

When asked a medical exam question in Norwegian, I use my knowledge from medical texts, articles, and other relevant sources in Norwegian to understand the question and formulate an answer that is both accurate and understandable.

Summary

My ability to answer challenging exam questions in Norwegian is due to a combination of extensive training on multilingual datasets, advanced NLP techniques, access to broad and updated knowledge, and the ability to continuously learn and improve. This makes me a powerful tool for assisting with a variety of complex and specialized tasks.” (GPT-4, OpenAI, 2024)

In light of some multimodal shortcomings of GPT-4 during the spring 2023, the digital fieldwork conducted a retest of GPT-4 during the spring of 2024, and below I will present some of the findings from this part.

B.1. Digital Fieldwork: Retesting GPT-4 in Spring 2024

In the digital fieldwork, the medical exam was retested in the spring of 2024, during which GPT-4 was equipped with a multimodal plugin, enabling it to perform multimodal analyses of the 10 patient cases that included images. During the retesting, images were added to each of these 10 patient cases along with the accompanying text, and GPT-4 correctly answered 70% of these tasks (7 out of 10 patient cases). Unlike medical students taking this exam who receive four answer choices for each question (multiple choice), this part was blinded for GPT-4, and it was given no options to choose from. Despite this, GPT-4 managed seven out of ten, provided detailed explanations for each answer, and in the three tasks where GPT-4 answered incorrectly, it corrected itself when confronted with the examiner’s guidance. As part of this digital fieldwork, I asked GPT-4 the following question:

Why are you able to give the correct answer to 7 out of 10 images of patient injuries you analyze on a medical exam?

“My ability to give correct answers to 7 out of 10 images of patient injuries on a medical exam can be explained by several factors related to training data, algorithms, and natural language processing (NLP). Here is a detailed explanation:

1. Extensive Training on Medical Data:

- Datasets: I am trained on a large amount of medical data that includes images, descriptions, diagnostic information, and treatment protocols. This encompasses both textual information and annotated medical images that provide context to the injuries.

- Data Variation: The dataset includes various types of injuries, diseases, and clinical presentations, enabling me to recognize and compare new cases with known patterns.

2. Natural Language Processing (NLP):

- Image Description: For images described textually, I can use NLP techniques to interpret and understand the description, which helps in providing a correct answer.

- Context Understanding: NLP helps in understanding the context around an image, including symptoms, patient history, and other relevant data, contributing to more accurate diagnoses.

3. Pattern Recognition:

- Pattern Recognition: I use advanced algorithms for pattern recognition trained to identify visual features and anomalies typical of various injuries and diseases.

- Algorithmic Approach: The use of algorithms that can analyze images in a way similar to how humans do, including identifying visual markers and anomalies.

4. Integration of Multisource Information:

- Combining Data: I can integrate information from multiple sources, such as clinical data, patient history, and visual findings, to arrive at a diagnosis.

- Interdisciplinary Approach: The ability to draw on knowledge from multiple medical disciplines to improve accuracy.

5. Knowledge of Medical Principles:

- Medical Knowledge: Through my training, I have learned fundamental medical principles, including anatomy, pathophysiology, and treatment protocols, which I can apply to analyze medical images.

- Updated Data: I am trained on up-to-date medical data, allowing me to provide relevant and current answers.

6. Learning from Mistakes and Feedback:

- Continuous Improvement: Based on feedback and evaluations, I can improve my algorithms and data models to increase accuracy over time.

- Adaptation: Learning from past mistakes and successes allows me to adjust my approach for better performance in future analyses.

My ability to give correct answers to 7 out of 10 images of patient injuries on a medical exam result from extensive training on medical data, advanced NLP techniques, pattern recognition, integration of multisource information, solid medical knowledge, and continuous learning. This enables me to analyze medical images effectively and provide accurate diagnoses based on available information.” (GPT-4, OpenAI, 2024)

B.2. Short sample checks of GPT-4 capability to handle the Norwegian minority language Sami and one of the two official written standards of the Norwegian language, New Norwegian

This analysis was conducted in March 2024 and explored GPT-4’s ability to handle translations involving the Norwegian minority language, Sami, and one of the two official written standards of Norwegian, New Norwegian (Nynorsk). The study included ten sample checks (see Appendix 1 for an example) to evaluate GPT-4’s proficiency in translating Sami into A) English and B) Norwegian. Additionally, ten sample checks assessed GPT-4’s ability to translate New Norwegian into A) English and B) Norwegian Bokmål. The results showed that GPT-4 performed well in all these translation tasks, demonstrating high accuracy and fluency. This indicates that GPT-4 is effective in processing and translating both the Sami and New Norwegian languages. The results provide insights into the model’s performance and its effectiveness in processing minority languages as Sami and the minority of the two written standards of Norwegian, New Norwegian.

Summary of phase A and B

When comparing GPT-4 with conventional ITSs, there are notable similarities and differences. Both systems are adaptive, capable of providing feedback, and covering a wide range of knowledge areas. However, GPT-4 initially struggled with multimodal inputs like images but improved with updates, whereas some ITSs handle these effectively. GPT-4 demonstrates strong adaptability to Norwegian, which many ITSs lack. Additionally, GPT-4, as a general model, can handle diverse tasks, while ITSs are often specialized for specific educational contexts. GPT-4 benefits from ongoing updates, unlike ITSs, which usually require manual updates.

GPT-4 can handle challenging Norwegian-language exams due to extensive training on large datasets, advanced natural language processing techniques, and being designed as a multilingual model. It has access to broad and updated knowledge and continuously improves its abilities. When answering questions, it draws information from various sources, enabling accurate and well-rounded responses.

In 2024, GPT-4 was retested with a multimodal plugin that enabled it to analyze medical images. It correctly answered 70% of image-based tasks, provided detailed explanations, and self-corrected when needed. This performance is attributed to extensive training on medical data, advanced NLP techniques, pattern recognition, integration of multiple information sources, solid medical knowledge, and continuous learning.

In 2024, we conducted 10 short sample checks of GPT-4 capability to handle the Norwegian minority language Sami and one of the two official written standards of the Norwegian language, New Norwegian. The results showed that GPT-4 performed well in all these translation tasks, demonstrating high accuracy and fluency. This indicates that GPT-4 is effective in processing and translating both Sami and New Norwegian languages.

Overall, GPT-4 shows advanced capabilities in understanding and generating accurate responses across diverse and complex tasks, particularly in Norwegian-language exams and medical image analysis.

General summary of phase 1, A & B

The results indicate that in the medical exam, descriptive and procedural knowledge are in a dialectical relationship, as GPT-4 cannot answer the exam questions without possessing both types of surface and deep knowledge. Exams in nursing, psychology, dentistry, the Swedish Scholastic Aptitude Test (SweSAT), and teacher education also exhibit this combination to some extent. Other tests, like the military conscription test (IQ test), also include this combination but are more oriented towards general knowledge. It can be added that a smaller version than GPT-4, GPT-3 managed 73 out of 80 tasks in the SweSAT in 2022 (Medium, 2022), and many were surprised at how well it handled abstract metaphors. The driving test and citizenship test primarily assess descriptive knowledge. From this, we can see that the GPT-4’s descriptive and procedural abilities can also be related to Anna Sfard’s (1998) two metaphors for learning (acquisition metaphor and participation metaphor) but in a more situated context within school or academia, not limited to a specific exam or test situation inside and outside academia.

Overall, GPT-4’s scores across the nine different exams/tests demonstrate its ability to handle multilinguist and relatively complex Norwegian-language questions at times at a high academic level. This suggests a need for a broader epistemological discussion about new forms of AI-generated communities of practice (CoP) and whether GPT-4 can be considered a highly capable dialogue partner and tutor for Norwegian-speaking students preparing for this form of summative assessment (school exams). These findings are consistent with our tentative knowledge summaries (Krumsvik, 2023) and case studies (Krumsvik, 2024a, b), which find a similar trend across various English-language exams/tests internationally (Ray, 2023).

Discussion

The results from testing GPT-4 on various Norwegian-language exams and tests on multilingual and cognitive capabilities in such contexts, aligns with our previous findings (Krumsvik, 2023; Krumsvik, 2024a, b). GPT-4’s cognitive capabilities also align with, for example, the pre-print from Bubeck et al. (2023), Ray (2023) and Deng et al. (2025). Particularly notable in this study is GPT-4’s multilinguistic abilities and performance on Norwegian-language exams, despite the model primarily being trained on English-language data. This indicates a good ability to generalize knowledge across languages. Exams such as medicine, nursing, and psychology include both descriptive and procedural knowledge, requiring deeper understanding and processing. GPT-4’s ability to handle such complex tasks suggests that the model can go beyond mere memorization of facts and engage in more sophisticated cognitive processing. Such findings are supported within ITSs by VanLehn (2011), who emphasizes the importance of deep learning in effective tutoring systems. VanLehn (2011) points out that human tutoring has an effect size of d = 0.79, while ITSs show a similar effect size of d = 0.76 in his study, and in the Tlili et al. (2025) metanalysis this is g = 1.07.

The results of our study suggest that GPT-4, as part of an ITS, can offer a comparable level of support as human tutors. This is especially relevant in light of previous research showing that traditional classroom instruction often does not reach the same level of effectiveness as one-on-one tutoring (Bloom, 1984). At the same time, it is important to note that, according to Ma et al. (2014), there are some distinctive features of ITSs (as mentioned earlier) that GPT-4 does not inherently possess. These are especially oriented towards calculating inferences from student responses, constructing multidimensional models of the student’s learning status, and placing the student’s current learning status in a multidimensional domain model. This can be partially achieved by establishing a domain-specific “chatbot within the chatbot” by integrating a training basis on top of GPT-4 and simultaneously embedding a script in this chatbot, tuning it more specifically towards having an ITS-related functionality (along with established ITSs). Integrating GPT-4 in ITS-related areas can potentially expand tutoring opportunities in the educational sector. With GPT-4’s ability to generate educational content, analyze student input, and offer real-time feedback, GPT-4 can significantly enhance tutoring opportunities and AI-CoPs (AI communities of practice) both for students who have Norwegian as their native language, but also for foreign students who may interact with GPT-4 in English about Norwegian-language exam questions, tests, and so on. This can be a good sparring partner for such language thresholds and be a valuable supplement for foreign students in addition to other measures and conventional tutors in higher education.

The implications from this position paper are that as artificial intelligence like GPT-4 approaches the capabilities of human tutoring in such summative exam and test contexts, it has potential for applications both inside and outside academia. The unique aspect of language models like GPT-4 is their ability to handle Norwegian contextual, multilinguistic “language games” (Wittgenstein, 1997), making it capable of addressing inquiries from both students, doctoral candidates and young people preparing for the driving test, showcasing versatility far beyond earlier language models and chatbots. The fact that GPT-4 understands Norwegian metaphors, satire, and tacit knowledge opens possibilities across all nine exam and test contexts examined in the study. This is particularly important considering that Norwegian is a small language globally, and it is crucial for students and citizens to manage Norwegian when they become professionals, as Norwegian is the primary language in almost all employment sectors in Norway. GPT-4’s abilities in the minority language Sami also creates new possibilities for the Sami population in Norway (which consists of appoximately 100,000 Sami people and where approximately 30% of these speak Sami).

Such findings indicate that Vygotsky’s concept of the zone of proximal development (ZPD) (Vygotsky, 1978) could be revitalized, with his notion of a mediating artifact becoming a reality as an active tool, evolving into a language model with human-level abilities in some areas—an “AI tutor” in the form of GPT-4. The well-known learning concept of “scaffolding” (Wood et al., 1976) is also relevant based on the findings in the study and has the potential to be realized both within and outside academia to a greater extent than before. This is especially significant for vulnerable minority-language students from low socioeconomic backgrounds, where language models might act as a catalyst for realizing adapted education and AI-CoPs more than before.

Based on the findings and used thoughtfully, it also appears that Bandura’s (1986) concept of self-efficacy and Deci and Ryan’s self-determination theory could gain new dimensions, as experiences of mastery and student autonomy are strengthened with GPT-4 as a sparring partner, available at any time, and providing continuous feedback (Ryan & Deci, 2020; Hattie & Timperley, 2007). From this perspective, the study’s findings suggest that Bloom’s (1984) 2 Sigma challenge could benefit from large language models, offering tutoring opportunities not seen before, with GPT-4 capable of mastering both generic and specific knowledge across nine Norwegian exam and test contexts.

Conclusion and implications

The research question in position paper focused on how capable GPT-4 is of answering exams and tests in Norwegian and what implications could this have for education. Despite some limitations, the position paper confirms that GPT-4 has significant multilinguistic and cognitive capabilities, making it a valuable tool both inside and outside academia as a sparring partner in various exam and test contexts. With an average precision rate of 94.26%, the model demonstrates the ability to answer both more factual questions and more complex and varied questions in a manner comparable to human performance in such contexts. Given that GPT-4 also masters Norwegian well, it is particularly relevant in the nine exam and test contexts studied, which are primarily in Norwegian, targeting the Norwegian educational and societal context (the exception being the SweSAT, where GPT-4 also shows strong proficiency in Swedish). GPT-4’s good performance in Norwegian as early as 2023 is probably because medical knowledge is globally standardized and largely overlaps with the English-language material the model was trained on. It handles written Norwegian and technical terminology well, and many exam tasks require pattern recognition rather than deep reasoning—an area where GPT-4 excels. The exams were highly standardized and not dependent on Norwegian-specific legal or cultural context, allowing the model to apply its global knowledge base effectively in Norwegian. This suggests that large language models can play a complementary role in various tutoring contexts in higher education, in developing AI-CoPs and in the further development of ITSs.

The implications of the position paper also highlight its potential to fill the gaps identified by research as crucial for student learning processes. GPT-4 can contribute to scaffolding, one-on-one tutoring, Vygotsky’s proximal development zone, and real-time feedback. It is multilingual and also available around the clock, regardless of who you are, where you live, who your parents are, or whether you are a resourceful or less resourceful student.

However, GPT-4 lacks the ability to model a student’s knowledge development over time, requiring integration with other systems to provide more targeted tutoring and formative assessment to students (this can be achieved by integrating scripts, APIs, domain-specific registry data, etc.). Further development of GPT-4 (“chatbot within the chatbot,” e.g., https://chatgpt.com/g/g-T6wJuA5tr-writing-the-synopsis-companion-for-phd-candidates) tailored to domain-specific tutoring purposes could potentially reduce some of the “Dunning-Kruger” effect (Kruger & Dunning, 1999) in higher education, by giving students a more calibrated understanding of what they actually know and do not know, thus supporting metacognitive aspects of learning.

Our findings align with several recent meta-analyses of ITSs in the education sector, which reveals that ITS and generic language models have a considerable effect on learning performance (g = 1.07 and g = 0.7) ) (Tlili, et al. 2025; Deng et al., 2025). Put simply, when an educational tool or pedagogical intervention yields an effect size above g = 0.7, it is not merely a statistical figure—students are likely to notice its impact in practice. However, these meta-analyses did not include primary studies conducted in Norwegian, and my study addresses this gap in the current body of knowledge.

In summary, such language models are intellectual artifacts mastering contextual language games (Wittgenstein, 1997), representing a significant leap from earlier language models, ITS and chatbots. But it requires mastery of the granularity in prompts based on “chain of thought” prompting, which often shows variability among students, citizens, and learners. This illustrates that such digital competence will become increasingly important both inside and outside academia in the coming years to fully exploit the potential of such language models for both formative and summative assessment contexts in the future. With such reservations, one implications of the findings might be that non-Norwegian-speaking students, university staff, and citizens in general who wish to learn Norwegian now have a competent “Norwegian teacher” by their side with GPT-4. However, AI is at the same time and “ethical minefield” and the study also underscores the need for vigilance and careful implementation to mitigate biases and ethical issues associated with AI use in education.

Methodological considerations – strengths and weaknesses

As mentioned, the case study the position paper builds on has several strengths and limitations. Given that the AI field is a “moving target” developing very rapidly, case studies with triangulation, cumulativeness, and the possibility for retesting over a year can be an effective design in such research settings. The ability to track progress over an entire year provides valuable insights into the model’s ability to adapt and improve over time. Although this case study has several strengths, there are also important limitations that need to be considered related to the methodological choices made. For instance, a full-scale testing of all nine exams and test areas was not conducted. Instead, a series of sample checks were carried out in this case study in five of the test areas, and such sample checks have several limitations that should be noted. Additionally, although the study followed the development over one year, this may still be a relatively short period to fully understand the long-term effects and improvements in AI models. Longer follow-up periods could provide more comprehensive insights. The results from the case study cannot be generalized and derive their strength from the depth perspective. The selected sample also has its biases. These limitations highlight the need for caution in interpreting the results and the importance of further research to validate and extend the findings.

This study also has limitations around key distinctions between current-generation large language models (LLMs) such as GPT-4 and the newer, more advanced o3-series models. This o3 model performed very well on the ARC-AGI (Abstraction and Reasoning Corpus), which is a challenging test designed to measure general intelligence—that is, the ability to solve entirely new problems without having encountered similar ones before (Chollet, 2024). While GPT-4 excels at knowledge reproduction within standardized domains—including high performance on Norwegian medical exams—its capabilities are primarily based on statistical pattern recognition and probabilistic next-token prediction across a vast corpus of pre-existing content. In contrast, o3 introduces a significant shift toward adaptive reasoning by performing test-time search over a space of reasoning strategies—so-called Chains of Thought (CoT)—allowing it to synthesize novel solutions rather than retrieve known ones. This represents a departure from models augmented with Retrieval-Augmented Generation (RAG), which rely on fetching external knowledge, whereas o3 leverages internal recombination and programmatic reasoning. As such, o3 models reflect an emerging paradigm of symbiotic intelligence where AI systems begin to approximate aspects of fluid reasoning beyond the limits of memorized knowledge. This case study has not undertaken a thorough and in-depth analysis of GPT-4’s linguistic capabilities in Norwegian on a large scale. Instead, it has examined this only at a more general level, related to exams, tests, and assessments. These assessment formats operate within specific linguistic frameworks, which enable generative AI to more easily recognize language patterns and thus perform better than it might outside such contexts. This constitutes a clear limitation that must be acknowledged, as the multilingual capabilities of generative AI can vary significantly depending on whether or not they operate within such linguistic boundaries.

Declaration of AI use

During the preparation of this work the author used GPT-4 (OpenAI, 2024) as the main research object to test GPT-4’s multilingual and cognitive capabilities in Norwegian exam and test languages. GPT-4 was also applied to translate the minority language Sami language to English and Norwegian and New Norwegian to English, as well as a validity community for the preliminary findings. After using this GPT-4, the author reviewed and edited the content as needed and takes full responsibility for the content of the publication.

Appendix 1

Example B2, short sample checks of GPT-4 capability to handle the Norwegian minority language Sami and one of the two official written standards of the Norwegian language, New Norwegian. The text used for this example is from this web page: https://www.samas.no/en/node/204

Original in Norwegian

Samisk undervisning - samisk forskning - samisk institusjon. Samisk høgskole ble formelt åpnet 1. november 1989. Og slik ble et ønske som lenge hadde eksistert i det samisk samfunnet oppfylt, nemlig å kunne tilby samisk lærerutdanning, på samisk, og i et samisk miljø. Dette studiet ble raskt etterfulgt av flere andre samiske studier innen høyere utdanning. En egen samisk høgskole gjorde det også mulig å utvikle tilbud som resonerte med behovene i det samiske samfunnet.

Samisk undervisning og samisk forskning er både grunnlaget og premisset for aktivitetene ved Samisk høgskole. Det er grunnen til at det ofte sies at Samisk høgskole er en samisk institusjon. Tilbudene og oppgavene til Samisk høgskole har utviklet seg betydelig siden 1989, og det har gjort at Samisk høgskole har vært med på å styrke det samiske samfunnet på mange måter. Samisk høgskole har stått i bresjen, sammen med andre institusjoner, for utvikling og endringer innen samisk forskning.

Original in Sami

Sámi oahpahus – Sámi dutkan – Sámi ásahus. Sámi allaskuvlla almmolaš rahpan dáhpáhuvai skábmamánu 1. beaivvi 1989. Nu ollašuvai sámiid gaskkas guhkit áiggi sávaldat fállat sámi oahpaheaddjioahpu - ja das maŋŋil eará ge alit dási oahpuid – sámegillii sámegielat birrasis. Sierra sámi allaskuvla attii maiddái vejolašvuođa ovdánahttit fálaldagaid mat buorebut vástidit sámi servvodaga dárbbuide.

Sámi oahpahus ja sámi dutkan lea leamašan sihke vuođđun ja eaktun sámi allaskuvlla doibmii. Danne dadjat dávjá Sámi allaskuvlla leat sámi ásahussan. Vuođđudeami rájes leat Sámi allaskuvlla fálaldagat ja doaimmat mealgat viiddiduvvon. Nu leage Sámi allaskuvla searvan nanusmahttit sámi servodaga. Sámi dutkan lea seamma áigodagas oppalaččat sakka rievdan ja viiddiduvvon ja dan ovdáneamis lea Sámi allaskuvla leamašan guovddážis ovttas eará ásahusaiguin.

Results, Sami to Norwegian:

GPT-4

Samisk utdanning – Samisk forskning – Samiske institusjoner. Den samiske høgskolens offentlige åpning fant sted 1. november 1989. Dermed ble det en langvarig etterspørsel blant samene om å tilby samisk lærerutdanning - og senere også andre høyere utdanninger - på samisk i samiske språkmiljøer. Den samiske høgskolen ga også muligheten til å utvikle tjenester som bedre kunne svare på behovene i det samiske samfunnet.

Samisk utdanning og samisk forskning har vært både grunnlaget og kjernen i den samiske høgskolens virke. Derfor sier man ofte at den samiske høgskolen er en samisk institusjon. Fra opprettelsen har den samiske høgskolens tjenester og aktiviteter stadig blitt utvidet. Slik har den samiske høgskolen bidratt til å styrke det samiske samfunnet. Samisk forskning har samtidig vært i kontinuerlig utvikling og utvidelse, og i denne utviklingen har den samiske høgskolen vært et sentralt samarbeidspunkt sammen med andre institusjoner.

Results, Sami to English

GPT-4

Sami Education – Sami Research – Sami Institutions. The official opening of the Sami University took place on November 1, 1989. Thus, a long-standing demand among the Sami to offer Sami teacher education - and later also other higher education - in Sami, within Sami-speaking environments, was fulfilled. The Sami University also provided the opportunity to develop services that better meet the needs of the Sami community.

Sami education and Sami research have been both the foundation and the core of the Sami University’s operations. Therefore, it is often said that the Sami University is a Sami institution. Since its establishment, the services and activities of the Sami University have continually expanded. In this way, the Sami University has contributed to strengthening the Sami community. Simultaneously, Sami research has been continuously evolving and expanding, and in this development, the Sami University has been a central point of cooperation with other institutions.

Results, Sami to New Norwegian

GPT-4

Samisk utdanning – Samisk forsking – Samiske institusjonar. Den samiske høgskulens offentlege opning fann stad 1. november 1989. Dermed blei ei langvarig etterspurnad blant samane om å tilby samisk lærarutdanning - og seinare også andre høgare utdanningar - på samisk i samiske språkmiljø. Den samiske høgskulen gav også moglegheita til å utvikle tenester som betre kunne svare på behova i det samiske samfunnet.

Samisk utdanning og samisk forsking har vore både grunnlaget og kjernen i den samiske høgskulens verksemd. Derfor seier ein ofte at den samiske høgskulen er ein samisk institusjon. Frå opprettinga har den samiske høgskulens tenester og aktivitetar stadig blitt utvida. På denne måten har den samiske høgskulen bidrege til å styrkje det samiske samfunnet. Samisk forsking har samtidig vore i kontinuerleg utvikling og utviding, og i denne utviklinga har den samiske høgskulen vore eit sentralt samarbeidspunkt saman med andre institusjonar.

Litteraturhenvisninger

Anderson, J. R. (2005). Cognitive Psychology and Its Implications (6th ed.). Worth Publishers.

Agarwal, N., Moehring, A., Rajpurkar, P., & Salz, T. (2023). Combining human expertise with artificial intelligence: Experimental evidence from radiology. MIT Department of Economics Working Paper. Retrieved from https://economics.mit.edu/sites/default/files/2023-07/agarwal-et-al-diagnostic-ai.pdf

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall.

Bloom, B. S. (1984). The 2 Sigma Problem: The Search for Methods of Group Instruction as Effective as One-to-One Tutoring. Educational Researcher, 13(6), 4-16.

Brasiel, S., Jeong, S., Ames, C., Lawanto, K., Yuan, M. & Martin, T. (2016). Effects of educational technology on mathematics achievement for K-12 students in Utah. Journal of Online Learning Research, 2(3), 205–226.

Brin, D., Sorin, V., Vaid, A., et al. (2023). Comparing ChatGPT and GPT-4 Performance in USMLE Soft Skill Assessments. Sci Rep, 13 (1): 16492.

Brodeur, P.G., Buckley, T.A., Kanjee, Z., Goh, E., Ling, E.B., Jain, P., Cabral, S., Abdulnour, R., Haimovich, A., Freed, J., Olson, A.P., Morgan, D.J., Hom, J., Gallo, R.J., Horvitz, E.,

Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J., Horvitz, E., Kamar, E., Lee, P., Lee, Y. T., Li, Y., Lundberg, S., Nori, H., Palangi, H., Ribeiro, M. T., Zhang, Y. (2023). Sparks of artificial general intelligence: Early experiments with GPT-4. ArXiv.

Carbonell, J. R. (1970). AI in CAI: An artificial-intelligence approach to computer-assisted instruction. IEEE Transactions on Man–Machine Systems, (4), 190–202. https://stacks.stanford.edu/file/druid:xr633ts6369/xr633ts6369.pdf

Carbonell, J. G., Michalski, R. S. & Mitchell, T. M. (Red.). (1983). Machine learning: An artificial intelligence approach. Morgan Kaufmann.

Chollet, F. (2024, December 20). OpenAI’s o3 achieves breakthrough on ARC-AGI benchmark. ARC Prize Blog. https://arcprize.org/blog/oai-o3-pub-breakthrough

Collins, A. M., Gould, L., Passafiume, J. & Carbonell, J. G. (1973). Improving interactive capabilities in computer assisted instruction (Rapport 2631). Bolt Beranek and Newman.

Creswell, J. W., & Guetterman, T. C. (2021). Educational Research: Planning, Conducting and Evaluating Quantitative and Qualitative Research, 6th Edn. Harlow: Pearson.

Deng, R., Jiang, M., Yu, X., Lu, Y., & Liu, S. (2025). Does ChatGPT enhance student learning? A systematic review and meta-analysis of experimental studies. Computers & Education, 227, 105224.

Fang, Y., Ren, Z., Hu, X. & Graesser, A. C. (2019). A meta-analysis of the effectiveness of ALEKS on learning. Educational Psychology, 39(10), 1278–1292. https://doi.org/10.1080/01443410.2018.1495829

Goh E, Gallo R, Hom J, et al. Large Language Model Influence on Diagnostic Reasoning: A Randomized Clinical Trial (2024). JAMA Netw Open. 2024;7(10):e2440969.

Guo, L., Wang, D., Gu, F., Li, Y., Wang, Y. & Zhou, R. (2021). Evolution and trends in intelligent tutoring systems research: A multidisciplinary and scientometric view. Asia Pacific Education Review, 22(3), 441–461.

Hattie, J. & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

Hirunyasiri, P., et al. (2023). Assessment of GPT-4’s Feedback Identification Capabilities Compared to Human Evaluators. Educational Technology Research and Development.

Jin, H.K., Lee, H.E. & Kim, E. (2024). Performance of ChatGPT-3.5 and GPT-4 in national licensing examinations for medicine, pharmacy, dentistry, and nursing: a systematic review and meta-analysis. BMC Medical Education 24, 1013 (2024).

Karthikesalingam and Natarajan (2024). AMIE: A Research AI System for Diagnostic Medical Reasoning and Conversations. Google Research. https://blog.research.google/2024/01/amie-research-ai-system-for-diagnostic_12.html

Kim, H.-E., Kim, H. H., Han, B.-K., Kim, K. H., Han, K., Nam, H., Lee, E. H., & Kim, E.-K. (2020). Changes in cancer detection and false-positive recall in mammography using artificial intelligence: A retrospective, multireader study. The Lancet Digital Health, 2(3), e138–e148.

Krumsvik, R. J. (2024a). Artificial intelligence in nurse education – a new sparring partner? Nordic Journal of Digital Literacy (NJDL), 19(3), 5–23.

Krumsvik R. J. (2025). GPT-4’s capabilities in handling essay-based exams in Norwegian: an intrinsic case study from the early phase of intervention. Front. Educ. 10:1444544.

Kruger, J. & Dunning, D. (1999). Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology, 77(6), 1121–1134.

Kulik, J. A., & Fletcher, J. D. (2016). Effectiveness of Intelligent Tutoring Systems: A Meta-Analytic Review. Review of Educational Research, 86(1), 42-78.

Liu M, Okuhara T, Chang X, Shirabe R, Nishiie Y, Okada H, Kiuchi T (2024). Performance of ChatGPT Across Different Versions in Medical Licensing Examinations Worldwide: Systematic Review and Meta-Analysis. J Med Internet Res. 2024 Jul 25;26:e60807. 39052324; PMCID: PMC11310649. https://www.jmir.org/2024/1/e60807/

Ma, W., Adesope, O. O., Nesbit, J. C., & Liu, Q. (2014). Intelligent Tutoring Systems and Learning Outcomes: A Meta-Analysis. Journal of Educational Psychology, 106(4), 901-918.

McDuff, D., Schaekermann, M., Tu, T., Palepu, A., Wang, A., Garrison, J., Singhal, K., Sharma, Y., Azizi, S., Kulkarni, K., Hou, L., Cheng, Y., Liu, Y., Mahdavi, S.S., Prakash, S., Pathak, A., Semturs, C., Patel, S.N., Webster, D.R., Dominowska, E., Gottweis, J., Barral, J., Chou, K., Corrado, G.S., Matias, Y., Sunshine, J., Karthikesalingam, A., & Natarajan, V. (2023). Towards Accurate Differential Diagnosis with Large Language Models. ArXiv, abs/2312.00164. https://arxiv.org/abs/2312.00164

Medium (2022). Does GPT-3 know Swedish? Medium, 27. June. Retrieved 27. March 2023 from: https://medium.com/@emil_svensson/does-gpt-3-know-swedish-3e22bfd7b58f

Moynihan, R., et al. (2015). Preventing overdiagnosis: how to stop harming the healthy. BMJ, 350, h869. https://pubmed.ncbi.nlm.nih.gov/26633255/

Nori, H., King, N., Mckinney, S.C., Carignan, D., Horvitz, E and Microsoft 2 OpenAI. (2023). Capabilities of GPT-4 on Medical Challenge Problems. March 16.

Rajpurkar, P., O’Connell, C., Schechter, A. et al. (2020). CheXaid: deep learning assistance for physician diagnosis of tuberculosis using chest x-rays in patients with HIV. npj Digit. Med. 3, 115 (2020).

OpenAI (2024). Dialogue and testing of GPT-4 from 20. March 2023 – 25. March 2024. OpenAI.

Pardos, Z., & Bhandari, S. (2023). Evaluating the Effectiveness of Hints Provided by GPT-4 in Intelligent Tutoring Systems. Journal of Artificial Intelligence in Education.

Phung, H., et al. (2023). Comparative Study of GPT-4 and ChatGPT in ITS. Computers & Education, 181, 104444.

Pinch, T. J. & Bijker, W. E. (1984). The social construction of facts and artefacts: Or how the sociology of science and the sociology of technology might benefit each other. Social Studies of Science, 14(3), 399–441.

Rosé, C. P. (2015). Jamie Carbonell in memory. Retrieved 20. March 2023 from: https://lti.cs.cmu.edu/sites/default/files/pdf/jgc60/jgc60_Rose_final.pdf

Ray, P. P. (2023). ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems, 3, 121–154.

Ryan, R. M. & Deci, E. (2020). Intrinsic and extrinsic motivation from a self-determination theory perspective: Definitions, theory, practices, and future directions. Contemporary Educational Psychology, 61(101860).

Sfard, A. (1998). On Two Metaphors for Learning and the Dangers of Choosing Just One. Educational Researcher, 27(2), 4–13.

Stake, R. E. (1995). The Art of Case Study Research. Thousand Oaks, CA: Sage.

Stake, R. E. (2006). Multi Case Study Analysis. New York, NY: The Guilford Press.

Smith, K. & Krumsvik, R. (2007). Video papers – a means for documenting practitioners’ reflections on practical axperiences: The story of two teacher educators. Research in Comparative and International Education, 2(4), 272–282.

Steenbergen-Hu, S., & Cooper, H. (2014). A Meta-Analysis of the Effectiveness of Intelligent Tutoring Systems. Journal of Educational Psychology, 105(2), 415-429.

Tlili, A., Saqer, K., Salha, S., & Huang, R. (2025). Investigating the effect of artificial intelligence in education (AIEd) on learning achievement: A meta-analysis and research synthesis. Information Development, 0(0).

VanLehn, K. (2011). The Relative Effectiveness of Human Tutoring, Intelligent Tutoring Systems, and Other Tutoring Systems. Educational Psychologist, 46(4), 197-221.

VanLehn, K., Lynch, C., Schulze, K., Shapiro, J. A., Shelby, R., Taylor, L., Treacy, D., Weinstein, A. & Wintersgill, M. (2005). The Andes physics tutoring system: Lessons learned. International Journal of Artificial Intelligence in Education, 15(3), 147–204.

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press

Wenger, E. (1987). Artificial intelligence and tutoring systems: Computational and cognitive approaches to the communication of knowledge. Morgan Kaufmann Publishers

Wittgenstein, L. (1997). Filosofiske undersøkelser (M. B. Tin, Overs.). Pax forlag.

Wood, D., Bruner, J. S., & Ross, G. (1976). The Role of Tutoring in Problem Solving. Journal of Child Psychology and Psychiatry, 17(2), 89-100.