Peer feedback med videoannotering för att främja lärarstudenters reflektion


Peer feedback är ett centralt element av bedömning för lärande, och användning av video och videoannotering har potential att främja lärarstudenters kritiska reflektioner kring deras undervisning. I den här designstudien undersökte vi feedback som förstaårsstudenter gav med videoannoteringsverktyget Studio på sina medstudenters filmade muntliga presentationer och visar hur verktyget påverkade feedbackprocessen. Data består av den skrivna feedback som studenterna skapade med videoannoteringsverktyget, studenternas svar på en undersökning och två semistrukturerade fokusgruppintervjuer. Vi använde kvalitativa metoder för dataanalysen. Resultaten visar att mer än hälften av alla kommentarer var positiva och bekräftande (beröm) och bara ett fåtal erbjöd råd för förbättring. Studenterna tyckte att feedbacken var detaljerad, att videoannoteringsverktyget gjorde det möjligt att kommentera vid konkreta ögonblick i presentationen och drog slutsatsen att processen med att både ge och få denna typ av feedback ökade deras förmåga till självreflektion. Även om studenterna uttryckte oro över att ge feedback till medstudenter, så var de positiva till att använda videoannotering för att ge respons och beskrev att processen bidrog till deras lärande och var relevant för deras framtida verksamhet som lärare. Resultaten visar att det är viktigt att lärarutbildare är medvetna om potentialen och begränsningarna med videoannoteringsverktyg för att förbättra pedagogiska processer och studenters reflektion kring deras eget sätt att undervisa. Resultaten pekar också på att studenternas färdigheter i att ge feedback av hög kvalitet behöver utvecklas mer.

Peer Feedback with Video Annotation to Promote Student Teachers’ Reflections


Peer feedback is a central element of assessment for learning, and the use of videos and video annotation may enhance student teachers’ critical reflections on their teaching practices. In this design-based research study we examined feedback provided by first-year student teachers on video recordings of their peers’ oral presentations using the video annotation tool Studio, and showed how Studio contributed to the process of providing feedback. Data consists of the students’ written feedback provided via the video annotation tool, the students’ responses to a survey and two semi-structured focus group interviews. We used qualitative methods to analyse the data. We found that more than half of all feedback comments were affirmative (praise), with only a few offering advice for improvements. The students found the feedback to be concise, indicated that Studio allowed them to attach feedback to concrete moments in presentations, and they concluded that providing feedback on their peers’ video recorded oral presentations, and receiving such feedback, enhanced their capacity for self-reflection. Even though they expressed concerns about providing feedback to their peers, the students expressed a positive attitude towards the learning design of providing feedback with video annotation and described it as useful and relevant for their future as teachers. These findings emphasise the importance of teacher educators’ awareness of the benefits and limitations of video annotation tools for enhancing pedagogy and students’ reflections on their own teaching practices. The findings also indicate that student teachers’ skills for providing high-quality feedback to their peers need to be improved.

Introduction

Reflections on teaching practices and assessment for learning (AfL) are important for fostering teacher professionalism in teacher education (Schön, 1983, 1987). Peer feedback is a central element of AfL (Black & Wiliam, 1998; Kluger & DeNisi, 1996), and the use of videos and video annotation may enhance student teachers’ critical reflections on their teaching practices (Calandra & Rich, 2015; Coffey, 2014; Colasante, 2011; Gaudin & Chaliès, 2015; Hamel & Viau-Guay, 2019; Körkkö et al., 2019; van Es et al., 2017; Zhang et al., 2011). In 2006, Butler et al. suggested that educators should use video annotation to provide formative feedback on transient events, such as professional training or oral presentations, because video recordings and annotation anchor comments to specific parts of a practical performance. Such comments attached to specific parts of a performance can be compared to comments in the margins of a written text, which are said to be more effective than general comments (Black & Wiliam, 1998; Hattie & Timperley, 2007; Igland & Østrem, 2019).

Research that examines the use of video annotation in teacher education is scarce (Pérez-Torregrosa et al., 2017; Tülüce, 2018), and scholars have paid little attention to the actual content of the formative feedback that student teachers provide to their peers (Harris et al., 2015; Van Den Berg et al., 2006). To the best of our knowledge, few studies have examined the specific use of video annotation tools for formative assessment and peer feedback in teacher education, and Tülüce (2018) likewise pointed out the need for more research on this topic. Our design-based research (DBR) study (Anderson & Shattuck, 2012) addressed this gap by examining the types of feedback that first-year student teachers provided via the video annotation tool Studio on their peers’ video recorded oral presentations. We also aimed to gain insights into student teachers’ experiences with using Studio to provide feedback. We posed the following research questions (RQs) to guide our study:

RQ1: What types of feedback did first-year student teachers provide via the video annotation tool Studio on their peers’ video recorded oral presentations?

RQ2: How did student teachers experience the use of the video annotation tool Studio when providing feedback on their peers’ video recorded oral presentations?

Theoretical perspective and relevant research

The role of formative assessment and peer feedback in teacher education

Formative assessment and peer feedback are important elements of AfL that can empower student teachers to reflect on their own and others’ performances and activate the students as pedagogical resources for one another (Black & Wiliam, 1998; Crooks, 1988; Kluger & DeNisi, 1996; Natriello, 1987).

Hattie and Timperley (2007) defined feedback as “information provided by an agent (e.g., teacher, peer, book, parent, self, experience) regarding aspects of one’s performance or understanding” (p. 81). Several studies have addressed the impact of feedback on the learning process (Hattie, 2009; Hattie & Timperley, 2007; Kluger & DeNisi, 1996; Kulik & Kulik, 1988). Researchers (Hattie & Timperley, 2007; Higgins et al., 2002) have indicated that feedback may be a powerful tool for enhancing students’ learning if the feedback is constructive and offers cues about how to engage with and complete the task at hand. Hattie and Timperley suggested that, to be effective, feedback must answer three major questions:

Where am I going? (What are the goals?), How am I going? (What progress is being made toward the goal?), and Where to next? (What activities need to be undertaken to make better progress?). (Hattie & Timperley, 2007, p. 86)

These questions correspond to the notions of feed up, feed back and feed forward, which are crucial for student learning (Black & Wiliam, 1998; Hattie & Timperley, 2007; Sadler, 1998). To be perceived as effective, feedback should be formative, and students should be given opportunities to use it to revise their work (Ferguson, 2011; Sadler, 1998). Feedback should be not only accessible, clear and comprehensible to the receiver (Black & Wiliam, 1998; Ferguson, 2011; Sadler, 1998) but also detailed rather than general (Igland, 2008), timely and personalised (Ferguson, 2011; Higgins et al., 2002; Li & De Luca, 2014). According to Hattie and Timperley (2007), feedback is less useful if it contains praise, rewards or punishment. Furthermore, a positive relationship between teacher and student is important for the student to perceive feedback from the teacher as effective (Pokorny & Pickford, 2010). In the Norwegian context, when analysing the quality of feedback given by teachers in lower secondary classrooms, Gamlem and Munthe (2014) found that teachers focused on establishing a positive and friendly atmosphere and provided encouraging and affirming feedback rather than high-quality, constructive and learning-oriented feedback. This finding indicates that teachers’ feedback-giving skills need to improve, a need that teacher education programmes can address.

In addition to offering advice for improvements, peer feedback can promote reflection and enhance learning by allowing student teachers to experience playing the role of both assessor and assessee (Pope, 2001; Topping, 2009; Topping et al., 2000). As assessors, students analyse the strengths and weaknesses of their peers’ work and offer feedback based on that analysis, while as assessees, the students receive feedback that they can use to improve their own work (Li et al., 2008). When providing feedback, students learn and may enhance their own reflection process (Cho & MacArthur, 2011; Cho & Cho, 2011). Students engage in several evaluation steps, first by assessing their peers’ work and then by comparing and contrasting it with their own work, thus benefiting from the overall evaluation process. During such evaluative work, students may develop a deeper understanding of the learning process and apply assessment criteria to evaluate their own learning outcomes (Nicol et al., 2014; Topping, 1998). Assessees often describe the feedback received from peers as more understandable and helpful than teacher feedback because peer feedback is written in more accessible language (Falchikov, 1995; Topping, 1998). Topping (2009) stated that “performance as a peer assessor is likely to improve with practice” (p. 63), as assessors and assessees develop their professional skills. By engaging in peer assessment, students may develop their understanding of the assessor and assessee roles and activate their peers as pedagogical resources for one another (Topping, 2009).

To summarise, teachers must develop the ability to provide constructive and high-quality feedback when evaluating students’ work and offering advice for improvements. By experiencing both the assessor and assessee roles, student teachers may improve their understanding of providing feedback as a method of AfL and how to implement it in educational practice (Hattie & Timperley, 2007; Li et al., 2010; Nicol et al., 2014; Topping, 1998).

Using video and video annotation in teacher education

In teacher education, video recordings of demonstrations and practice performances allow students to observe and reflect on their professional practices (Calandra & Rich, 2015; Coffey, 2014; Colasante, 2011; Gaudin & Chaliès, 2015; Hamel & Viau-Guay, 2019; Körkkö et al., 2019; van Es et al., 2017; Zhang et al., 2011).

Videos can be viewed and analysed from multiple perspectives, allowing teachers to gain a deeper understanding of their teaching practices (Santagata et al., 2007; van Es, 2009). Scholars contend that students improve their skills by observing and analysing classroom practices and that students’ interpretations and reflections are more specific when they study video cases (Stockero, 2008). Moreover, students pay close attention to details and specific events in classrooms when using video material (van Es & Sherin, 2002). Researchers (Seidel et al., 2011; Zhang et al., 2011) have shown that teachers participating in professional development courses find videos of teaching practices useful for enhancing their reflections.

However, researchers have emphasised that to support learning and unlock the full potential of videos, student teachers’ interactions with videos should be guided and structured (Brouwer & Robijns, 2014; Krammer et al., 2006; Santagata & Angelici, 2010; Seidel et al., 2013; van Es & Sherin, 2002; van Es et al., 2017). Few studies have examined the use of video annotation in teacher education (Pérez-Torregrosa et al., 2017). Rich and Hannafin (2009) suggested that video annotation tools can augment and extend teachers’ reflections by structuring video materials and facilitating effective interpretation. Therefore, in combination with appropriate frameworks for guiding interpretation (such as assessment rubrics), video annotation tools can direct and empower analyses of video recorded content.

Studies on the use of video annotation focus on different topics, such as promoting information and communication technology competencies (Anderson et al., 2012), annotating video-based portfolios (Alley & King, 2015; Cebrián de la Serna et al., 2015), collaborating on performance analysis projects (Brooks, 2012; Luo & Pang, 2010) and criteria for choosing a video annotation tool (Rich & Trip, 2011). Several studies have focused on the use of video annotation for promoting reflections on teaching practice (Colasante, 2011; Ellis et al., 2015; McFadden et al., 2014; Rich & Hannafin, 2009; Roehrig et al., 2015). Chieu et al. (2015) found that by engaging in discussions about animated classroom activities via video annotation, teachers improved their capacity to reflect on and offer solutions to various classroom situations. Some researchers (Ellis et al., 2015; McFadden et al., 2014) conducted studies that involved newly qualified teachers commenting on video recordings of their peers’ practices. The researchers found that the newly qualified teachers who did not receive proper guidance and support from their instructor provided their peers with mostly descriptive and explanatory feedback. Additionally, the newly qualified teachers often simply praised and approved their peers’ practices rather than offering suggestions or advice for improvement. Ellis et al. (2015) indicated that video annotation tools can support reflective commenting; however, newly qualified teachers need additional support to develop their reflection capacities and their ability to provide constructive feedback. Tülüce (2018) examined the affordances and constraints of a video annotation tool for commenting on video recorded micro-teaching units in a peer-based feedback process. The findings showed that the use of video annotation contributed to student teachers’ learning by (a) structuring self and peer assessments, (b) improving the quality of the feedback, (c) identifying student teachers’ weaknesses and strengths, and (d) promoting reflections.

To summarise, giving student teachers the opportunity to analyse audiovisual recordings of teaching practices can enhance their reflections if such analyses are guided and structured. Video annotation can contribute to guiding and structuring student teachers’ analyses of video content, which enhances their professional reflections and improves their ability to provide constructive, high-quality feedback.

Method

Participants and setting

Two teacher education models exist in Norway. The consecutive model focuses on pedagogy and didactics for students who have completed the disciplinary studies in their subjects and have decided to become teachers. The concurrent model integrates disciplinary and pedagogical studies, which are taught at the same time. In this study, we collected data from a five-year teacher education master’s programme based on the concurrent model. The programme prepares student teachers to teach grades 1–7 or 5–10. Several Norwegian teacher education institutions participated in the project, funded by the Norwegian Ministry for Education and Research and led by the Centre for Professional Learning in Teacher Education (ProTed). The project goal was to improve the learning process during the first year of teacher education. All first-year student teachers (N = 104) at the Østfold University College participated in the STIL 2.0 sub-project, which examined students’ collaborative learning with digital technology (ProTed, 2019). The STIL 2.0 sub-project included a DBR study (Anderson & Shattuck, 2012) that aimed to investigate how the video annotation tool Studio could support student teachers in their feedback-giving process. The study was designed by a group of three teacher educators from different subject areas in teaching and learning: Norwegian, drama, and information and communication technology (ICT). Table 1 presents the steps of the sub-project and the learning opportunities provided to the student teachers.

Table 1. Steps of the STIL 2.0 sub-project

| Week | Step |
| --- | --- |
| Week 1 | Seminar on presentation techniques with a focus on visualisation via digital tools, such as PowerPoint and Prezi, and design principles (Mayer, 2020). |
| Week 2 | Lecture on the observation method and discussions with subject teachers on what to observe; students designed their own observation template form in pairs. |
| Week 3 | Students observed an experienced teacher in the classroom during their practical training. |
| Week 4 | Seminar on oral presentation techniques (Penne & Hertzberg, 2020). Introduction and discussion of an assessment rubric for providing constructive feedback on video recordings of teaching practices. Discussion about how to provide constructive feedback based on Hattie and Timperley’s (2007) research and students’ previous experiences. |
| Week 5 | Students discussed their observations in pairs and prepared their oral presentations. |
| Week 6 | (Start of data collection.) Student teachers’ seven-minute oral presentations were video recorded and uploaded on the Canvas learning management system (LMS); the peer review feature in Canvas LMS was used to assign presentations to specific student groups. Students provided feedback on their peers’ video recorded oral presentations using the annotation tool Studio in Canvas. The students had not used Studio before and were introduced to the functions and features via a three-minute video tutorial. |

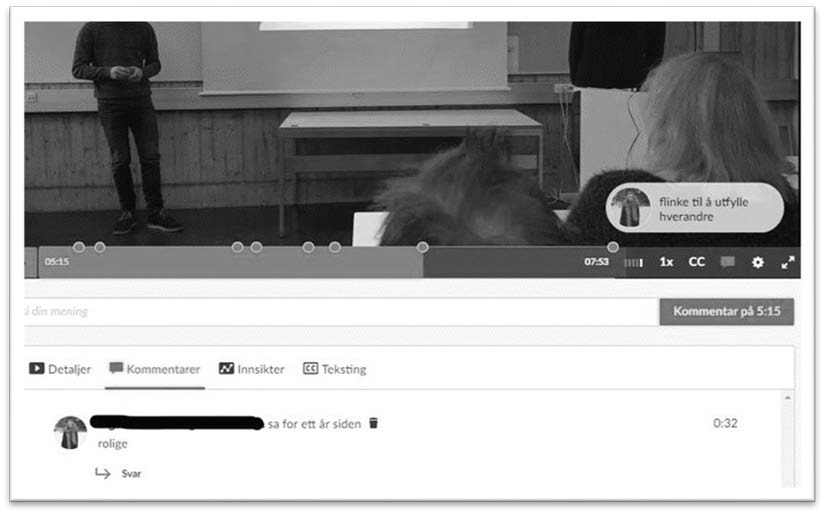

Studio, the video annotation tool in Canvas

Studio is a video annotation tool integrated in the Canvas learning management system (LMS). Studio allows instructors and students to actively engage in learning by attaching comments to certain points in a video’s timeline. When the video is watched again, the comments pop up in the parts of the video to which they were anchored. Figure 1 provides a screenshot of Studio.

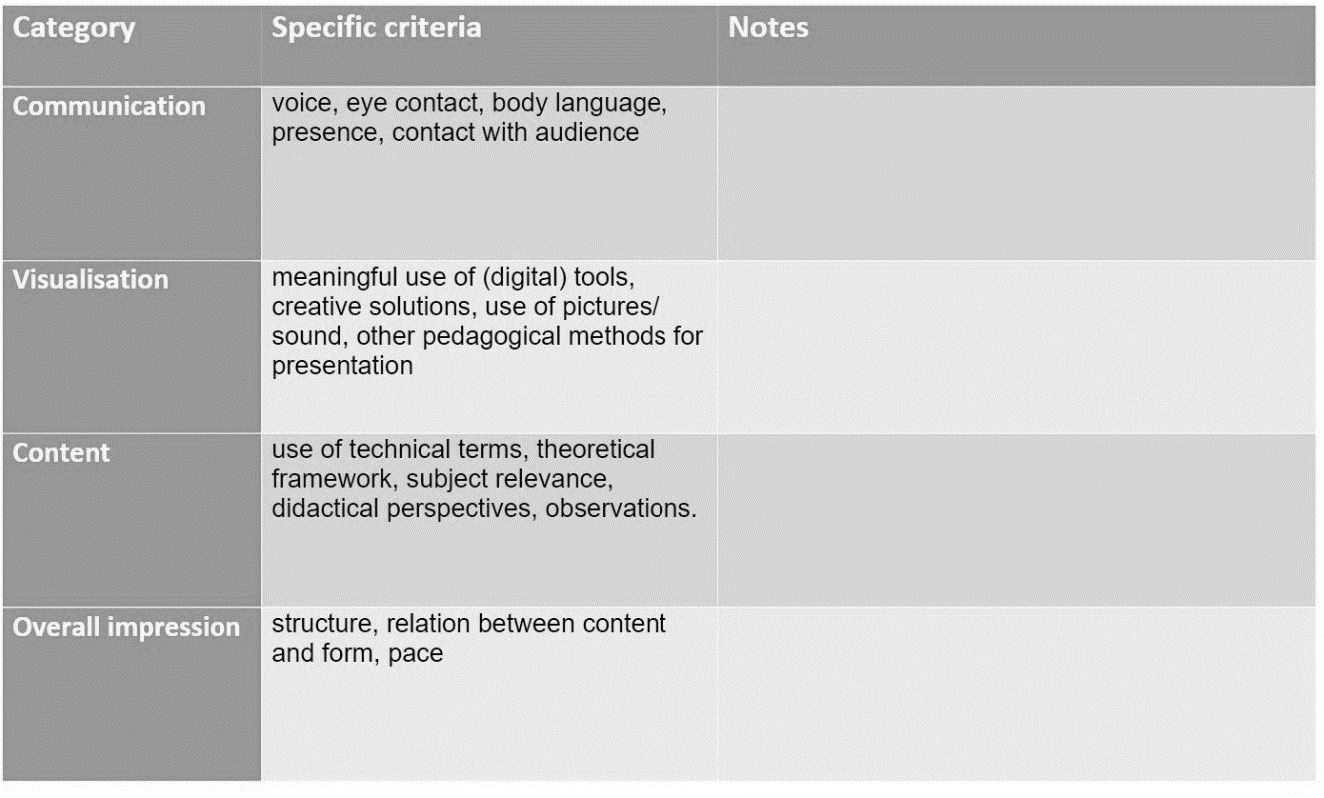

Assessment rubric for the oral presentations

The project team designed an assessment rubric to be used by the student teachers to assess their peers’ oral presentations. The assessment rubric involved elements of what the students had studied during the sessions prior to data collection (Table 1). However, the rubric did not contain instructions on how to formulate feedback or on how to offer advice for improvements. The project team assumed that the students had some experience in providing feedback because AfL has been a priority area in the Norwegian education sector for over a decade (Utdanningsdirektoratet, 2019). Figure 2 shows the assessment rubric used in the project.

Data and analysis

We used qualitative methods (Creswell & Poth, 2018) to analyse the data and to find evidence on student teachers’ experiences with using the video annotation tool Studio to provide feedback on their peers’ video recorded oral presentations. To address RQ1 (What types of feedback did first-year student teachers provide via the video annotation tool Studio on their peers’ video recorded oral presentations?), we examined the written feedback (a total of 234 comments) provided by the 18 pairs of students who had agreed to participate in the research project by signing the informed consent form. We used thematic analysis to investigate the students’ comments, and inspired by Clarke and Braun (2014), we conducted the analysis through the following steps. First, we familiarised ourselves with the data and imported the data into NVivo 12 for further analysis. Second, we coded the data by following an inductive approach (Castleberry & Nolen, 2018). To name the codes, we used descriptive labels that directly described or were taken from the students’ feedback. For example, if the students commented that a peer was difficult to understand and should speak up, we labelled the comment use of voice. Third, we grouped the codes according to context to create themes. Clarke and Braun (2014) defined themes as patterns among codes – that is, themes connect related codes to reveal a clearer picture of the phenomenon. Finally, we compared the emergent themes with the descriptors in the assessment rubric to understand how students’ feedback coincided with the assessment rubric. We describe the themes and their relation to the assessment rubric in the presentation of the findings.

To address RQ2 (How did student teachers experience the use of the video annotation tool Studio when providing feedback on their peers’ video recorded oral presentations?), we digitally administered a survey to all students who participated in the STIL 2.0 sub-project (N = 104). The students completed the survey on the same day that they recorded their presentations and provided and received feedback via the video annotation tool Studio. The response rate of 96% (N = 100) is considered valid (Nulty, 2008). The survey consisted of two closed questions concerning the students’ study background (study programme, main subjects) and six closed questions that allowed free-text comments in the responses regarding various aspects of the project, such as collaboration, students’ engagement and the feedback-giving process. Responses to two of these questions were selected for further analysis: What effect did the following have on your learning process? (a) Providing feedback on peers’ video recorded oral presentations; (b) Studio as a tool for providing feedback. The questions, which were meant to elicit students’ experiences with Studio, provided participants with response options based on a 4-point Likert scale (no effect, little effect, some effect or great effect). Our analysis of the students’ responses to these questions is described in the following paragraphs.

To understand students’ experiences with using Studio, 36 free-text comments were analysed thematically in NVivo 12 by following the steps outlined previously and by employing an inductive coding approach (Castleberry & Nolen, 2018). Two major themes were identified: design of the learning process and use of the video annotation tool Studio. These two themes were explored further in two 45-minute, semi-structured focus group interviews (Kvale et al., 2017; Wibeck, 2010) involving 10 students (5 in each group) who were randomly chosen from among the participants. The interviews focused on the feedback-giving process and other aspects of the STIL 2.0 sub-project, such as students’ collaboration and engagement. We asked the following questions (connected to the previously identified themes of design of the learning process and use of the video annotation tool Studio) during the interviews to examine students’ experiences with using Studio: How did you experience giving and receiving feedback on a video recorded presentation? What do you think of Studio as a tool for providing feedback? The focus group interviews were recorded and transcribed by the researchers. Interview transcripts were imported into NVivo 12 and analysed together with the free-text comments from the survey. We used the same codes when analysing the survey responses and the interview transcripts. The codes and the emergent themes are presented in Table 4 and in the findings section. Both authors analysed the data together to ensure research validity. All data was originally in Norwegian and was translated into English by the research team.

The research project was approved by the Norwegian Centre for Research Data (NSD); the anonymised data was processed in compliance with the General Data Protection Regulation (GDPR). All names used are pseudonyms.

Findings

Analysis of the types of feedback that the student teachers provided via the video annotation tool Studio (RQ1)

The thematic analysis (Castleberry & Nolen, 2018; Clarke & Braun, 2014) identified several themes that reflected the types of feedback that the student teachers provided via the video annotation tool Studio. Table 2 presents the themes, the codes, the percentage of total comments that reflect each code and, in the third and fourth columns, whether and how each code relates to the assessment rubric feedback criteria.

The data shows that the codes, and thus the types of feedback, occurred with markedly different frequencies in the student teachers’ comments on their peers’ video recorded oral presentations. Some comments touched on more than one topic and were therefore coded against several themes. Some codes were related to the assessment rubric, while others were not.

Table 2. The themes, codes, percentages of comments and correspondence to the assessment rubric feedback criteria

| Themes related to types of feedback (Percentage of theme-related comments out of total number of comments, N=234) | Codes (Percentage of comments out of total number of comments) | Relation to assessment rubric feedback criteria | Description of criteria (from assessment rubric; see Figure 2) |
| --- | --- | --- | --- |
| Communication (60.7%) | Use of voice (12.0%) | Communication | Voice, eye contact, body language, presence, contact with audience |
| | Gestures and body language (18.8%) | Communication | |
| | Presentation style (23.9%) | Communication | |
| | Physical interaction of presenters (6.0%) | Not related | |
| Content (33.3%) | Content of presentation (33.3%) | Content | Use of technical terms, theoretical framework, subject relevance, didactical perspectives, observations |
| Visualisation (7.3%) | Visualisation (7.3%) | Resources and methods for visualisation | Meaningful use of (digital) tools, creative solutions, use of pictures/sound, other pedagogical methods for presentation |
| Overall impression (2.6%) | Overall impression (2.6%) | Overall impression | Structure, relation between content and form, pace |
| Affirmation (52.6%) | Affirmation (52.6%) | Not related | |
| Advice for improvement (12.0%) | Advice for improvement (12.0%) | Not related | |

The codes use of voice (12.0%), gestures and body language (18.8%), presentation style (23.9%), and physical interaction of presenters (6.0%) exemplify ways of communicating with the audience or within the group of presenters. Except for physical interaction of presenters, the codes were also clearly related to the assessment criterion communication. Therefore, we grouped these codes into the theme communication (60.7%). The code content of presentation was related to the assessment criterion content; therefore, we created the theme content (33.3%). Only a small number of student teachers provided feedback related to visualisation (7.3%), and even fewer on overall impression (2.6%). However, these codes can also be associated with corresponding assessment criteria, and we therefore created the themes visualisation (7.3%) and overall impression (2.6%). We also found two codes that did not relate to the criteria in the assessment rubric: the code affirmation (52.6%) and the code advice for improvement (12.0%). We created corresponding themes to reflect this type of feedback provided by the students. In the following sections, we examine these types of feedback in detail and provide representative quotations from students’ feedback to illustrate the identified themes.
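The arithmetic behind the theme percentages can be checked with a short sketch. The Python snippet below is illustrative only and is not part of the study's NVivo analysis; the variable and function names are hypothetical, and the figures are the percentages reported in Table 2. It assumes that the communication theme is obtained by adding its four code percentages, and it shows that the theme percentages add up to more than 100%, which is consistent with some comments being coded against several themes.

```python
# Percentages of all comments (N = 234) per code, copied from Table 2.
# Names are illustrative; the study itself used NVivo 12, not this script.
communication_codes = {
    "use of voice": 12.0,
    "gestures and body language": 18.8,
    "presentation style": 23.9,
    "physical interaction of presenters": 6.0,
}

# Percentages of all comments per theme, copied from Table 2.
themes = {
    "communication": 60.7,
    "content": 33.3,
    "visualisation": 7.3,
    "overall impression": 2.6,
    "affirmation": 52.6,
    "advice for improvement": 12.0,
}


def total_share(shares):
    """Add percentage shares and round to one decimal, as in the table."""
    return round(sum(shares.values()), 1)


# Assumption: the theme share is the plain sum of its code shares.
communication_total = total_share(communication_codes)

# A total above 100 means that comments can belong to more than one theme.
all_themes_total = total_share(themes)

print("communication theme:", communication_total)
print("all themes combined:", all_themes_total)
```

Because the percentages overlap in this way, they describe how often each type of feedback occurred and should not be read as a partition of the 234 comments.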

Communication (60.7%)

Much of the students’ feedback focused on the presenters’ use of voice, gestures and body language and the presentation style. When commenting on the use of voice while presenting, the students appreciated the presenters who spoke clearly, loudly and at an appropriate tempo and advised their peers who spoke quietly to speak up; for instance, one student commented: “Speaks loudly and clearly. Good use of voice.” Concerning gestures and body language, the students acknowledged when their peers expressed engagement via gestures and when they addressed the audience while maintaining eye contact. The students approved of the presenters who talked confidently, calmly and naturally, without simply reading their papers in front of the class. For example, a student wrote: “You interact well with the PowerPoint […], but you read more than you talk.” This type of feedback corresponded to the criterion communication in the assessment rubric. Several students also provided feedback related to the presenters’ physical interactions (6.0%), a category that was not included in the assessment rubric, but nevertheless relates to communication. For instance, a student commented: “It was good that Kate looked at Mary when she was presenting.”

Content (33.3%)

The students commented in detail on the content of the presentations and evaluated it either by confirming their peers’ actions or by asking relevant questions. The students pointed out that their peers used good examples or had interesting reflections. For example, one student asked: “What did the teacher do to adapt her teaching to the students? You mentioned that the teacher did so; however, you did not explain how.”

Visualisation (7.3%)

Several comments were related to the criterion resources and methods of visualisation in the assessment rubric. For instance, a student wrote: “Too much text in your PowerPoint; you could have included more pictures instead.” The students emphasised the importance of the oral presentation matching the content of the PowerPoint slides, the use of good and relevant pictures and the adequate visualisation of the ideas presented.

Overall impression (2.6%)

Very few students commented on their overall impression of the presentation. Those who did wrote, for example: “All presenters were very engaged, which contributed to a very good presentation. Well done!” Although the criterion overall impression was included in the assessment rubric, only a few students summarised their overall impressions of their peers’ video recorded oral presentations. This may indicate either that the students did not consider this criterion important or that video annotation is less suitable for overall comments because the students focused on commenting on specific points in the video recorded oral presentations.

Affirmation (52.6%)

The students praised and affirmed their peers’ actions by using words such as good, great and nice in their comments, for example: “Good reflection. Nice body language.” The student teachers praised their peers’ organisation and style, communication skills, presentation content and visualisation. By expressing approval, the students confirmed their peers’ actions, potentially boosting their peers’ confidence and motivation. The affirmation criterion was not included in the assessment rubric. The large number of affirming comments may indicate that the learners had been previously advised to provide positive feedback.

Advice for improvement (12.0%)

Although advice for improvement was not included as a criterion in the assessment rubric, some students provided constructive feedback regarding possible improvements. They offered advice on various aspects, such as presentation style, structure and content. For instance, a student wrote:

You had too much text in the PowerPoint. This is relevant information; however, it is difficult to go through all this information in your presentation. You can either reduce the content or perhaps include an illustration to visualise it.

By providing this kind of feedback, the students demonstrated their awareness of the AfL principles and approaches to formative assessment, which they had been introduced to during the seminar on feedback techniques and criteria for high-quality feedback four weeks prior to the presentations (see Table 1).

To summarise the analysis, the types of feedback that the students provided were highly related to the assessment rubric and mostly addressed communication and content. Overall, 52.6% of the comments were affirmative, while only 12% offered advice for improvement.

Analysis of students’ experiences with using the video annotation tool Studio (RQ2)

When asked to what degree they found that the use of video recordings and video annotation had affected their learning process, the students answered as shown in Table 3.

Table 3. Students’ answers regarding the effects of Studio on their learning

| What effect did the following have on your learning process? | No effect | Little effect | Some effect | Great effect |
| --- | --- | --- | --- | --- |
| Providing feedback on peers’ video recorded oral presentations | 12% | 20% | 39% | 29% |
| Studio as a tool for providing feedback | 11% | 22% | 43% | 24% |

Note. N=104
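The response shares in Table 3 can be combined into a single share of students who reported some or great effect. The following is a minimal sketch of that arithmetic, using only the percentages given in the table; the names `table3` and `combined_share` are illustrative assumptions and not part of the study's analysis procedure.

```python
# Response shares (in percent) transcribed from Table 3; N = 104.
# The dictionary layout and all names here are illustrative assumptions.
table3 = {
    "Providing feedback on peers' video recorded oral presentations": {
        "No effect": 12,
        "Little effect": 20,
        "Some effect": 39,
        "Great effect": 29,
    },
    "Studio as a tool for providing feedback": {
        "No effect": 11,
        "Little effect": 22,
        "Some effect": 43,
        "Great effect": 24,
    },
}


def combined_share(row, categories=("Some effect", "Great effect")):
    """Sum the percentage shares of the selected response categories."""
    return sum(row[category] for category in categories)


for item, row in table3.items():
    # Each row of the table should account for all respondents.
    assert sum(row.values()) == 100
    print(f"{item}: {combined_share(row)}% reported some or great effect")
```

Summing the two upper response categories in this way gives the combined figures for each survey item.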

In total, 68% of the students reported that providing feedback on a peer’s video recorded oral presentation had some or great effect on their learning process, and 67% indicated the same for Studio as a tool. The analysis of 36 students’ comments revealed several themes that reflected the students’ experiences with using Studio. Table 4 presents the codes and the emergent themes.

Table 4. The codes and themes regarding students’ experience with using Studio

| Themes | Codes |
| --- | --- |
| Design of the learning process | Peer feedback |
| | Contribution to learning |
| | Positive attitude |
| | Project organisation |
| | Use in the future |
| | Not useful |
| Use of the video annotation tool Studio | Affordances of video |
| | Affordances of Studio |

The codes that addressed the topics related to the design and outcome of the peer-feedback process were grouped under the theme design of the learning process. Student comments addressed the peer feedback process and its contribution to learning, reflected a positive attitude, discussed project organisation and the use of Studio in the future or described the process as not useful.

The codes affordances of video and affordances of Studio overlapped considerably; usually when the students commented on how video annotation contributed to the feedback or the learning process, they also referred to the advantages of using the videos. As using video is a prerequisite for carrying out video annotation, we combined the codes into the theme use of the video annotation tool Studio. In the following section, we examine the themes in detail.

Design of the learning process

When commenting on the use of video annotation, the students expressed their thoughts about the learning design of the peer-feedback process. Some students found it difficult to provide feedback – they did not feel comfortable commenting on their peers’ presentations and were afraid of being too critical, as illustrated by the following answer from the interviews: “I know many talked about it, that it was horrid to assess others because you don’t know how they take it” (Interview 1, Marc). The students’ remarks may indicate that many of them felt insecure in the role of assessor. However, other students appreciated receiving feedback from their peers, and the students stated repeatedly that video annotation made it easy to provide and receive feedback.

The students also stated that the feedback process via video annotation contributed to their learning and was useful. For example, a student pointed out:

You became so much more aware of what you were doing. And to see yourself talking, you see yourself from the perspective of the ones who see you. And to get comments on what you do well and what you do badly, that was unbelievably constructive for me. I felt I learnt a lot from that. (Interview 2, Anna)

The students explained that being able to see their own teaching practices in action combined with their peers’ comments promoted their learning and enhanced their own reflection process.

Several students indicated having a positive attitude towards the design of the learning process and expressed, for instance: “It was really great to see what we had to improve […], and it was easy to provide feedback” (Interview 2, Charly). The students appreciated Studio and the work style and emphasised that they found both very useful.

Referring to the organisation of the project, one student mentioned that it might have been helpful if they had been introduced to the video annotation tool earlier in the process. Students also mentioned that too many breaks were scheduled and commented: “A bit too much wasted time ‒ otherwise a nice day” (Survey comment).

The students indicated that being able to provide quality feedback and becoming familiar with possible tools was relevant for their future. In the interviews they explained:

I also think it was great to give them [the peers] feedback and so on. We are all going to be teachers and will give feedback to our pupils, and to practice giving them the ‘right’ critique or praise is really important. (Interview 2, Mary)

Two survey comments claimed that the peer-feedback process was not useful and that the time could have been spent on more important things, for example: “I didn’t get anything out of the project and think this was time we could have used for more important things, like studying for the exams” (Survey comment).

Use of the video annotation tool Studio

As already mentioned, the codes affordances of video and affordances of Studio overlapped considerably; therefore, they are presented together. The students stated repeatedly that the comments they received were more precise than what they were used to and emphasised that they experienced the possibility of inserting comments exactly where needed as positive and easy. For example, a student pointed out:

I think this was a good way to give feedback. We could insert some comments into the video, which you could see when you got the video back and see exactly where the things happened, instead of a more general comment in the end. (Interview 2, Chris)

While some students mentioned feeling uncomfortable while being recorded, the students always pointed out that it was helpful to watch their peers’ presentations again before providing feedback. Moreover, seeing their own actions on video in combination with peer comments improved their capacity to evaluate themselves and reflect upon their actions while presenting (e.g., talking fast, playing with hair, keeping hands in pockets); for instance, a student stated:

An unusual way to get feedback. Additionally, it was a bit disgusting to see yourself on video; however, you get to see what you do right or wrong, and the feedback you get is attached to the exact moment in the presentation. (Survey comment)

To summarise, the students explained that Studio assisted them in the feedback-giving process, as the comments were precise and anchored to certain points in the presentations, and they expressed some concerns about providing feedback to their peers and being recorded. However, the students concluded that the feedback-giving process via the video annotation tool Studio enhanced their capacity for self-reflection. The students described the learning design as useful, contributing to their learning and relevant for their future teaching practice. Only very few comments suggested changes in the process or considered the experience useless.

Discussion

In this section, we discuss the findings in relation to (a) the types of feedback that the student teachers provided on their peers’ video recorded oral presentations using the video annotation tool Studio, and (b) the student teachers’ experiences with the feedback-giving process via Studio. Ours is a DBR study, and as Anderson and Shattuck (2012) pointed out, “design-based interventions are rarely if ever designed and implemented perfectly; there is always room for improvements in the design” (p. 17). Therefore, in line with this perspective, we also address the improvements that can be made when conducting a feedback-giving process via Studio in the future.

The analysis of the types of feedback that the student teachers provided on their peers’ video recorded oral presentations revealed that the learners commented mostly on communication (the use of voice, gestures and body language, as well as learners’ enthusiasm). In addition, the students provided positive feedback (affirmation) by praising organisation and style, presentation content and visualisation. While the students also provided feedback about the content of presentations, visualisation, and their overall impression, very little feedback involved advice for improvement. The analyses of the data showed that the students paid attention to the assessment rubric and addressed the criteria in the rubric. However, the students’ feedback mostly concerned aspects of communication and the content of their peers’ oral presentations. Except for overall impression, all types of feedback were detailed and mainly addressed specific and contextual aspects of the video recorded presentations; for example, the students suggested that the presenters should take their hands out of their pockets or include less text on the PowerPoint slides. However, as the feedback was attached to specific moments in the students’ oral presentations, the students may have been challenged when trying to arrive at an overview of the whole presentation and provide constructive feedback corresponding to the notions of feed up, feed back and feed forward (Hattie & Timperley, 2007). This may explain why so few comments addressed the criterion of overall impression. These findings coincide with the findings from previous studies that have indicated that interpretations and reflections are more specific when students watch videos (Stockero, 2008) because they pay more attention to details and specific events (van Es & Sherin, 2002).
The fact that some students provided feedback concerning advice for further improvement may indicate that the students were aware of the principles of AfL and formative assessment (e.g., feedback concerning “Where to next?”). However, only a small number of the first-year students provided such feedback, potentially indicating that they did not have much experience in doing so and that the principles of AfL require particular attention and should be reinforced.

The responses that the student teachers provided about their experiences concerned the design of the learning process and the use of the video annotation tool Studio. Several students expressed a positive attitude towards the learning design and emphasised that precise feedback and video recordings improved their reflections and learning. The student teachers confirmed the educational value of the learning process they had engaged in by describing it as an informative process that contributed to learning and fostered the development of fundamentally important skills for the teaching profession. However, the students also expressed anxiety about commenting on their peers’ performance. Moreover, the students provided feedback on the use of the video annotation tool Studio by pointing out its benefits: (a) videos and Studio enabled visualisation of students’ performance while giving an oral presentation, (b) Studio allowed feedback to be attached to concrete moments in a presentation, and (c) the feedback provided to peers was precise and contextual. These findings corroborate the findings from previous studies that students can enhance their reflections by using video annotation tools in the feedback-giving process (Rich & Hannafin, 2009; Tülüce, 2018). The students emphasised the value of precise feedback and of videos that demonstrate educational practices in enhancing their reflections and learning.

The findings concerning the student teachers’ experiences may also help to explain the types of feedback they provided on their peers’ oral presentations. As mentioned previously, little advice for improvement was included in the feedback; instead, the students expressed much praise and affirmation, focusing on positive feedback and encouragement. These findings also coincide with the results from previous studies on the use of video annotation tools for feedback in teacher education. Student teachers often simply praise and approve their peers’ practices rather than offering suggestions or advice for improvement (Ellis et al., 2015; McFadden et al., 2014). The fact that the student teachers provided primarily affirmative feedback may be related to the discomfort they experience when offering feedback. As Topping (2009) stated, peer feedback fosters student teachers’ professionalism, but in the beginning, assessors may experience anxiety about providing feedback to their peers and may offer mostly positive feedback. According to Topping (2009), giving positive feedback and affirming the assessees is a way to overcome their anxiety. The students’ uncertainty about providing feedback may explain the findings of the present study, as only a few comments coincided with what has been described as high-quality or effective feedback (Hattie & Timperley, 2007). As noted previously, these findings may indicate that the AfL principles require attention and should be reinforced in the education of student teachers.

Conclusion and implications

Our findings have several pedagogical implications regarding the use of the annotation tool Studio for improving the AfL practices of student teachers.

First, Studio may affect the type of feedback that the students provide; more generally, it may impact students’ reflections and learning. The student teachers made use of the assessment rubric and provided concise feedback. However, most of the feedback addressed the manner of presenting and the content of the presentations, while less attention was paid to the resources and methods for visualisation or the overall impression. The lack of overall evaluations may be related to the fact that the feedback was contextualised and attached to specific points in the presentation, which facilitated the provision of detailed and concise feedback but may have hindered the students’ ability to develop an overall view of the presentation and to offer general feedback corresponding to the notions of feed up, feed back and feed forward (Hattie & Timperley, 2007). This finding emphasises the need for teacher educators to put particular effort into revealing the potential of digital technologies to students and presenting the benefits and limitations of video annotation tools for enhancing AfL practices and students’ learning.

Second, the types of feedback that the student teachers provided on their peers’ video recorded oral presentations (communication, content, visualisation, overall impression, affirmation and advice for improvement) indicate that teacher educators and student teachers need to develop a profound understanding of the AfL principles (Black & Wiliam, 1998) to be able to integrate them into their teaching practices. For example, to improve the quality of feedback, teacher educators should emphasise feedback that involves advice for improvement rather than praise and affirmation of students’ actions. It seems important to offer students guidance about how to give constructive advice for further improvement and to help them overcome the anxiety that they experience when providing feedback. Development of feedback-giving skills, as also suggested by Gamlem and Munthe (2014), may contribute to the student teachers’ capability to provide constructive feedback in their future classrooms and to rely less on affirming and encouraging comments aimed at creating a positive and friendly atmosphere in classrooms.

Third, the student teachers confirmed the educational value of peer feedback via video annotation by describing it as an informative process that enhanced their reflections, contributed to learning and fostered the development of fundamentally important skills for the teaching profession. The students emphasised that the video annotation tool Studio helped to contextualise feedback and made feedback concise because it was anchored to specific moments in the students’ presentations, as Butler et al. (2006) suggested. This implies that student teachers may benefit from teacher educators’ employment of learning designs that combine video annotation and peer feedback.

Therefore, our findings inform teacher educators, educational practitioners and researchers about the types of feedback that student teachers provided when using the video annotation tool Studio to examine their peers’ oral presentations. The findings also emphasise the crucial importance of teacher educators’ awareness of the potential, benefits and limitations of this tool for enhancing pedagogy, students’ reflection and the development of students as learners.

More research is needed to examine how student teachers use video annotation in the feedback-giving process to improve AfL practices and to enhance students’ reflections and learning.

References

Alley, K., & King, J. (2015). Capturing quality practice: Annotated video-based portfolios and graduate students’ reflective thinking. In E. Ortlieb, M. B. Mcvee, & L. E. Shanahan (Eds.), Video reflection in literacy teacher education and development: Lessons from research and practice (Vol. 5, pp. 279‒295). Emerald Publishing. https://doi.org/10.1108/S2048-045820150000005020

Anderson, K., Kennedy-Clark, S., & Galstaun, V. (2012). Using Video Feedback and Annotations to Develop ICT Competency in Pre-Service Teacher Education (paper presentation). Joint Australian Association for Research in Education and Asia-Pacific Educational Research Association Conference (AARE-APERA) and World Education Research Association (WERA) Focal Meeting Dec 2‒6, Sydney, New South Wales, Australia.

Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41(1), 16‒25. https://doi.org/10.3102/0013189x11428813

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7‒74. https://doi.org/10.1080/0969595980050102

Brooks, P. (2012). Dancing with the Web: Students bring meaning to the semantic web. Technology, Pedagogy and Education, 21(2), 189‒212. https://doi.org/10.1080/1475939X.2012.697400

Brouwer, N., & Robijns, F. (2014). In search of effective guidance for preservice teachers’ viewing of classroom video. In B. Calandra & P. Rich (Eds.), Digital video for teacher education (pp. 62‒76). Routledge.

Butler, M., Zapart, T., & Li, R. (2006). Video annotation – Improving assessment of transient educational events. In E. Cohen (Ed.), Proceedings of the 2006 InSITE Conference (Vol. 6, pp. 19–26). Informing Science Institute. https://doi.org/10.28945/3019

Calandra, B., & Rich, P. J. (2015). Digital video for teacher education: Research and practice. Routledge.

Castleberry, A., & Nolen, A. (2018). Thematic analysis of qualitative research data: Is it as easy as it sounds? Currents in Pharmacy Teaching and Learning, 10(6), 807‒815. https://doi.org/10.1016/j.cptl.2018.03.019

Cebrián de la Serna, M., Bartolomé Pina, A.-R., Cebrián Robles, D., & Ruiz Torres, M. (2015). Study of the portfolios in the practicum: Analysis of a PLE-portfolio. Relieve: Revista Electrónica de Investigación y Evaluación Educativa, 21(2), 1‒18.

Chieu, V. M., Kosko, K. W., & Herbst, P. G. (2015). An analysis of evaluative comments in teachers’ online discussions of representations of practice. Journal of Teacher Education, 66(1), 35‒50. https://doi.org/10.1177/0022487114550203

Cho, K., & MacArthur, C. (2011). Learning by reviewing. Journal of Educational Psychology, 103(1), 73‒84. https://doi.org/10.1037/a0021950

Cho, Y. H., & Cho, K. (2011). Peer reviewers learn from giving comments. Instructional Science, 39(5), 629‒643. https://doi.org/10.1007/s11251-010-9146-1

Clarke, V., & Braun, V. (2014). Thematic analysis. In A. C. Michalos (Ed.), Encyclopedia of quality of life and well-being research (pp. 6626‒6628). Springer Netherlands.

Coffey, A. M. (2014). Using video to develop skills in reflection in teacher education students. Australian Journal of Teacher Education, 39(9), 86‒97. https://doi.org/10.14221/ajte.2014v39n9.7

Colasante, M. (2011). Using video annotation to reflect on and evaluate physical education pre-service teaching practice. Australasian Journal of Educational Technology, 27(1), 66‒88. https://doi.org/10.14742/ajet.983

Creswell, J. W., & Poth, C. N. (2018). Qualitative Inquiry & Research Design: Choosing Among Five Approaches (4th ed., international student ed.). Sage.

Crooks, T. J. (1988). The impact of classroom evaluation practices on students. Review of Educational Research, 58(4), 438‒481. https://doi.org/10.2307/1170281

Ellis, J., McFadden, J., Anwar, T., & Roehrig, G. (2015). Investigating the social interactions of beginning teachers using a video annotation tool. Contemporary Issues in Technology and Teacher Education, 15(3), 404‒421. https://citejournal.org/volume-15/issue-3-15/general/investigating-the-social-interactions-of-beginning-teachers-using-a-video-annotation-tool/

Falchikov, N. (1995). Peer feedback marking: Developing peer assessment. Innovations in Education and Training International, 32(2), 175‒187. https://doi.org/10.1080/1355800950320212

Ferguson, P. (2011). Student perceptions of quality feedback in teacher education. Assessment & Evaluation in Higher Education, 36(1), 51‒62. https://doi.org/10.1080/02602930903197883

Gamlem, S. M., & Munthe, E. (2014). Mapping the quality of feedback to support students’ learning in lower secondary classrooms. Cambridge Journal of Education, 44(1), 75‒92. https://doi.org/10.1080/0305764X.2013.855171

Gaudin, C., & Chaliès, S. (2015). Video viewing in teacher education and professional development: A literature review. Educational Research Review, 16, 41‒67. https://doi.org/10.1016/j.edurev.2015.06.001

Hamel, C., & Viau-Guay, A. (2019). Using video to support teachers’ reflective practice: A literature review. Cogent Education, 6(1). https://doi.org/10.1080/2331186X.2019.1673689

Harris, L. R., Brown, G. T. L., & Harnett, J. A. (2015). Analysis of New Zealand primary and secondary student peer- and self-assessment comments: Applying Hattie and Timperley’s feedback model. Assessment in Education: Principles, Policy & Practice, 22(2), 265‒281. https://doi.org/10.1080/0969594x.2014.976541

Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Routledge. https://doi.org/10.4324/9780203887332

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81‒112. https://doi.org/10.3102/003465430298487

Higgins, R., Hartley, P., & Skelton, A. (2002). The conscientious consumer: Reconsidering the role of assessment feedback in student learning. Studies in Higher Education, 27(1), 53‒64. https://doi.org/10.1080/03075070120099368

Igland, M.-A. (2008). Mens teksten blir til: Ein kasusstudie av lærarkommentarar til utkast [While the text comes into being: A case study of teacher feedback on drafts]. Unipub.

Igland, M.-A., & Østrem, S. E. (2019). 7. Mens teksten blir til: digital respons til elevtekstar under arbeid [7. While the text comes into being: digital feedback on pupils’ texts in progress]. In M.-A. Igland, A. Skaftun, & D. Husebø (Eds.), Ny hverdag? [A new everyday?] (pp. 170–194). Universitetsforlaget. https://doi.org/10.18261/9788215031606-2019-09

Kluger, A. N., & DeNisi, A. (1996). The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychological Bulletin, 119(2), 254‒284. https://doi.org/10.1037/0033-2909.119.2.254

Krammer, K., Ratzka, N., Klieme, E., Lipowsky, F., Pauli, C., & Reusser, K. (2006). Learning with classroom videos: Conception and first results of an online teacher-training program. ZDM, 38(5), 422‒432. https://doi.org/10.1007/BF02652803

Kulik, J. A., & Kulik, C.-L. C. (1988). Timing of feedback and verbal learning. Review of Educational Research, 58(1), 79‒97. https://doi.org/10.3102/00346543058001079

Kvale, S., Brinkmann, S., Anderssen, T. M., & Rygge, J. (2017). Det kvalitative forskningsintervju [The qualitative research interview] (3rd ed.). Gyldendal akademisk.

Körkkö, M., Morales Rios, S., & Kyrö-Ämmälä, O. (2019). Using a video app as a tool for reflective practice. Educational Research, 61(1), 22‒37. https://doi.org/10.1080/00131881.2018.1562954

Li, J., & De Luca, R. (2014). Review of assessment feedback. Studies in Higher Education, 39(2), 378‒393. https://doi.org/10.1080/03075079.2012.709494

Li, L., Liu, X., & Steckelberg, A. L. (2010). Assessor or assessee: How student learning improves by giving and receiving peer feedback. British Journal of Educational Technology, 41(3), 525‒536. https://doi.org/10.1111/j.1467-8535.2009.00968.x

Li, L., Steckelberg, A. L., & Srinivasan, S. (2008). Utilizing peer interactions to promote learning through a web-based peer assessment system. Canadian Journal of Learning and Technology / La revue canadienne de l’apprentissage et de la technologie, 34(2). https://doi.org/10.21432/T21C7R

Luo, G., & Pang, Y. (2010). Video annotation for enhancing blended learning of physical education. In 2010 International Conference on Artificial Intelligence and Education (ICAIE) (pp. 761‒764). IEEE. https://doi.org/10.1109/ICAIE.2010.5641475

Mayer, R. E. (2020). Multimedia learning (3rd ed.). Cambridge University Press. https://doi.org/10.1017/9781316941355

McFadden, J., Ellis, J., Anwar, T., & Roehrig, G. (2014). Beginning science teachers’ use of a digital video annotation tool to promote reflective practices. Journal of Science Education and Technology, 23(3), 458‒470. https://doi.org/10.1007/s10956-013-9476-2

Natriello, G. (1987). The impact of evaluation processes on students. Educational Psychologist, 22(2), 155‒175. https://doi.org/10.1207/s15326985ep2202_4

Nicol, D., Thomson, A., & Breslin, C. (2014). Rethinking feedback practices in higher education: A peer review perspective. Assessment & Evaluation in Higher Education, 39(1), 102‒122. https://doi.org/10.1080/02602938.2013.795518

Nulty, D. D. (2008). The adequacy of response rates to online and paper surveys: What can be done? Assessment & Evaluation in Higher Education, 33(3), 301‒314. https://doi.org/10.1080/02602930701293231

Penne, S., & Hertzberg, F. (2020). Vurdering av muntlige tekster [Assessment of oral texts]. In S. Penne & F. Hertzberg (Eds.), Muntlige tekster i klasserommet [Oral texts in the classroom] (2nd ed., pp. 102‒115). Universitetsforlaget.

Pérez-Torregrosa, A. B., Díaz-Martín, C., & Ibáñez-Cubillas, P. (2017). The use of video annotation tools in teacher training. Procedia – Social and Behavioral Sciences, 237, 458‒464. https://doi.org/10.1016/j.sbspro.2017.02.090

Pokorny, H., & Pickford, P. (2010). Complexity, cues and relationships: Student perceptions of feedback. Active Learning in Higher Education, 11(1), 21‒30. https://doi.org/10.1177/1469787409355872

Pope, N. (2001). An examination of the use of peer rating for formative assessment in the context of the theory of consumption values. Assessment & Evaluation in Higher Education, 26(3), 235‒246. https://doi.org/10.1080/02602930120052396

ProTed (2019). Studieintensive arbeidsformer ved implementering av femårige grunnskolelærerutdanninger (STIL) [Teaching methods to intensify studying in initial teacher education]. Centre for Professional Learning in Teacher Education, University of Oslo. https://www.uv.uio.no/proted/utviklingsomrader/stil-sluttrapport-january-2019.pdf

Rich, P. J., & Hannafin, M. (2009). Video annotation tools: Technologies to scaffold, structure, and transform teacher reflection. Journal of Teacher Education, 60(1), 52‒67. https://doi.org/10.1177/0022487108328486

Rich, P. J., & Tripp, T. (2011). Ten essential questions educators should ask when using video annotation tools. TechTrends, 55(6), 16‒24. https://doi.org/10.1007/s11528-011-0537-1

Roehrig, G., Donna, J., Hoelscher, M., & Billington, B. (2015). Design of online induction programs to promote reform-based science and mathematics teaching. Teacher Education and Practice, 28(23), 458‒470.

Sadler, D. R. (1998). Formative assessment: Revisiting the territory. Assessment in Education: Principles, Policy & Practice, 5(1), 77‒84. https://doi.org/10.1080/0969595980050104

Santagata, R., & Angelici, G. (2010). Studying the impact of the lesson analysis framework on preservice teachers’ abilities to reflect on videos of classroom teaching. Journal of Teacher Education, 61(4), 339‒349. https://doi.org/10.1177/0022487110369555

Santagata, R., Zannoni, C., & Stigler, J. W. (2007). The role of lesson analysis in pre-service teacher education: An empirical investigation of teacher learning from a virtual video-based field experience. Journal of Mathematics Teacher Education, 10(2), 123‒140. https://doi.org/10.1007/s10857-007-9029-9

Schön, D. (1983). The reflective practitioner: How professionals think in action. Basic Books.

Schön, D. A. (1987). Educating the reflective practitioner: Toward a new design for teaching and learning in the professions. Jossey-Bass.

Seidel, T., Blomberg, G., & Renkl, A. (2013). Instructional strategies for using video in teacher education. Teaching and Teacher Education, 34, 56‒65. https://doi.org/10.1016/j.tate.2013.03.004

Seidel, T., Stürmer, K., Blomberg, G., Kobarg, M., & Schwindt, K. (2011). Teacher learning from analysis of videotaped classroom situations: Does it make a difference whether teachers observe their own teaching or that of others? Teaching and Teacher Education, 27(2), 259‒267. https://doi.org/10.1016/j.tate.2010.08.009

Stockero, S. L. (2008). Using a video-based curriculum to develop a reflective stance in prospective mathematics teachers. Journal of Mathematics Teacher Education, 11(5), 373‒394. https://doi.org/10.1007/s10857-008-9079-7

Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249‒276. https://doi.org/10.3102/00346543068003249

Topping, K. (2009). Peers as source of formative assessment. In H. Andrade & G. J. Cizek (Eds.), Handbook of formative assessment (pp. 61‒74). Taylor & Francis Group.

Topping, K. J., Smith, E. F., Swanson, I., & Elliot, A. (2000). Formative peer assessment of academic writing between postgraduate students. Assessment & Evaluation in Higher Education, 25(2), 149‒169. https://doi.org/10.1080/713611428

Tülüce, H. S. (2018). Using a web-based video annotation tool in pre-service language teacher education: Affordances and constraints (paper presentation). The European Conference on Language Learning, June 29 – July 1, Istanbul, Turkey.

Utdanningsdirektoratet (2019). Erfaringer fra nasjonal satsing på vurdering for læring (2010–2018) [Experiences from the national priority programme for assessment for learning]. https://www.udir.no/tall-og-forskning/finn-forskning/rapporter/erfaringer-fra-nasjonal-satsing-pa-vurdering-for-laring-2010-2018/

Van Den Berg, I., Admiraal, W., & Pilot, A. (2006). Designing student peer assessment in higher education: Analysis of written and oral peer feedback. Teaching in Higher Education, 11(2), 135‒147. https://doi.org/10.1080/13562510500527685

van Es, E. A., & Sherin, M. G. (2002). Learning to notice: Scaffolding new teachers’ interpretations of classroom interactions. Journal of Technology and Teacher Education, 10(4), 571‒596. https://www.semanticscholar.org/paper/Learning-to-Notice-%3A-Scaffolding-New-Teachers-%E2%80%99-of-ELIZABETHA.VAN/c253d8a8436583754a70c862ddea603366d71665

van Es, E. A. (2009). Participants’ roles in the context of a video club. Journal of the Learning Sciences, 18(1), 100‒137. https://doi.org/10.1080/10508400802581668

van Es, E. A., Cashen, M., Barnhart, T., & Auger, A. (2017). Learning to notice mathematics instruction: Using video to develop preservice teachers’ vision of ambitious pedagogy. Cognition and Instruction, 35(3), 165‒187. https://doi.org/10.1080/07370008.2017.1317125

Wibeck, V. (2010). Fokusgrupper – Om fokuserade gruppintervjuer som undersökningsmetod [Focus groups – About focused group interviews as research method] (2nd ed.). Studentlitteratur AB.

Zhang, M., Lundeberg, M., Koehler, M. J., & Eberhardt, J. (2011). Understanding affordances and challenges of three types of video for teacher professional development. Teaching and Teacher Education, 27(2), 454‒462. https://doi.org/10.1016/j.tate.2010.09.015